After installing and configuring Hadoop to run in fully distributed mode on CentOS, we can move forward and test Hive.

Download a stable release from an Apache mirror and decompress it.

| wget http://mirror.metrocast.net/apache//hive/stable/hive-0.6.0.tar.gz |

| tar -xvf hive-0.6.0.tar.gz |
| cp -rf hive-0.6.0 /usr/lib/hive |
| export PATH=$PATH:/usr/lib/hive/bin |
| # need to set the Hadoop home variable |
| export HADOOP_HOME=/usr/lib/hadoop |
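Before starting the shell, it can help to confirm that the directories and the PATH entry from the commands above are actually in place. The following is only a minimal sketch: the `check_dir` helper is a hypothetical name, and the two paths simply mirror the ones used in this post.

```shell
# Paths as used in this post; adjust if you installed elsewhere.
HIVE_DIR=/usr/lib/hive
HADOOP_HOME=${HADOOP_HOME:-/usr/lib/hadoop}

# Hypothetical helper: report whether an expected directory exists.
check_dir() {
    if [ -d "$1" ]; then
        echo "ok: $1"
    else
        echo "missing: $1"
    fi
}

check_dir "$HIVE_DIR"
check_dir "$HADOOP_HOME"

# Confirm that the hive launcher resolves through PATH.
if command -v hive >/dev/null 2>&1; then
    echo "hive launcher: $(command -v hive)"
else
    echo "hive launcher not found on PATH"
fi
```

Note that the export lines only last for the current shell session; adding them to a profile file such as ~/.bashrc is one way to keep them.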

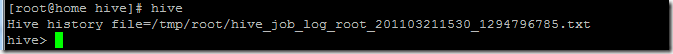

Then you can run the Hive command shell.

Hive here is just a standard Java application, started with its own set of arguments when the shell launches.

Given raw input like this:

| "01","35004","AL","ACMAR",86.51557,33.584132,6055,0.001499 |

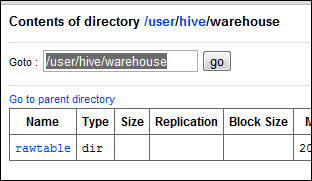
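To get a feel for the record layout before loading it into Hive, the sample line can be split on commas in the shell. This is just an illustrative sketch using awk; the `field` helper is a hypothetical name, and the post does not state what each column means, so the fields are referred to by position only.

```shell
# One sample record, copied from the raw input shown above.
line='"01","35004","AL","ACMAR",86.51557,33.584132,6055,0.001499'

# Hypothetical helper: print the n-th comma-separated field
# with its surrounding double quotes removed.
field() {
    printf '%s\n' "$line" | awk -F',' -v n="$1" '{ gsub(/"/, "", $n); print $n }'
}

echo "field count: $(printf '%s\n' "$line" | awk -F',' '{ print NF }')"
echo "field 3: $(field 3)"
echo "field 4: $(field 4)"
echo "field 7: $(field 7)"
```

A plain comma split like this only works because none of the quoted values in the sample contain a comma themselves.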

We will first create a table called rawtable with just one column to hold all the data, using the create-table syntax described at http://wiki.apache.org/hadoop/Hive/HiveQL

| create table rawtable(raw string); |
| load data inpath '/user/hadoop/input' into table rawtable; |

When you run a simple query such as select count(*) from rawtable, you can tell that a MapReduce job is invoked to get the results.
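The same statements can also be run non-interactively. The sketch below writes them to a script file and passes it to `hive -f`; the file name and the `INPUT_PATH` variable are assumptions for illustration, so point `INPUT_PATH` at the HDFS directory that actually holds your input.

```shell
# Hypothetical locations; adjust both to your setup.
HQL_FILE=${HQL_FILE:-/tmp/rawtable.hql}
INPUT_PATH=${INPUT_PATH:-/user/hadoop/input}

# Write the three statements from this post into a script file.
cat > "$HQL_FILE" <<EOF
CREATE TABLE rawtable (raw STRING);
LOAD DATA INPATH '${INPUT_PATH}' INTO TABLE rawtable;
SELECT COUNT(*) FROM rawtable;
EOF

# Run the script only when the hive launcher is available.
if command -v hive >/dev/null 2>&1; then
    hive -f "$HQL_FILE"
else
    echo "hive not found; statements left in $HQL_FILE"
fi
```

Keep in mind that LOAD DATA INPATH moves the files from the HDFS source directory into the table's directory rather than copying them, and that running the script a second time will fail at CREATE TABLE because the table already exists.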

If you check the HDFS file system, every table you create is stored under /user/hive/warehouse/[tablename].
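One way to look at that directory is `hadoop fs -ls`. The snippet below is a small sketch that assumes the warehouse location shown above (it is controlled by the hive.metastore.warehouse.dir setting, so yours may differ); the `table_dir` helper is a hypothetical name.

```shell
# Warehouse root as shown in this post; it may differ on your cluster.
WAREHOUSE=/user/hive/warehouse
TABLE=rawtable

# Hypothetical helper: build the HDFS directory for a table name.
table_dir() {
    echo "$WAREHOUSE/$1"
}

# List the table's files only when the hadoop launcher is available.
if command -v hadoop >/dev/null 2>&1; then
    hadoop fs -ls "$(table_dir "$TABLE")"
else
    echo "hadoop not found; would list $(table_dir "$TABLE")"
fi
```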

After that, we can write more complex queries with joins and subqueries; see http://wiki.apache.org/hadoop/Hive/HiveQL

Besides the CLI, you can run 'hive --service hwi' to expose a simple web interface for data access at http://home:9999/hwi

We can also start a Hive server.

Remote users can then use the Thrift API or JDBC to connect to this database powered by Hive.
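As a rough sketch, both the web interface and the server can be started in the background through the same `--service` switch. The `start_service` helper and the log file locations are hypothetical, and `hiveserver` is the service name I would expect for this Hive release, so check the list printed by `hive --service help` on your install.

```shell
# Hypothetical helper: start a named Hive service in the background
# and send its output to a log file under /tmp.
start_service() {
    if command -v hive >/dev/null 2>&1; then
        nohup hive --service "$1" > "/tmp/hive-$1.log" 2>&1 &
        echo "started $1 (pid $!), log in /tmp/hive-$1.log"
    else
        echo "hive not found; would run: hive --service $1"
    fi
}

start_service hwi         # web interface, port 9999 in this post
start_service hiveserver  # Thrift server for remote clients
```

With this generation of the server, JDBC clients typically connect with a URL of the form jdbc:hive://host:10000/default, where 10000 is the usual default port; treat both as assumptions to verify against your configuration.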
