Apache Camel is a great implementation of the Enterprise Integration Patterns (EIP). If you are not a seasoned Spring/Java developer, you might find it hard to get started. The goal of this post is to simplify that process and show how easy it is to get started without memorizing any code snippets. We will use a plain console app to host and start one Camel route.

To get started, open Eclipse and create a Maven project (Maven is used here only to pull in the dependencies automatically). If you don't have any Maven experience, you can instead download the required JARs and add them to your build path.

Create a Maven project using the simple project type; this gives us a project with Maven ready to use.

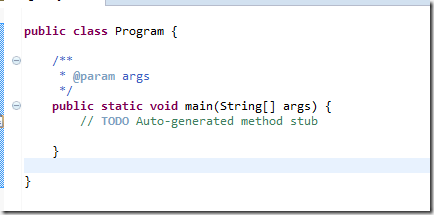

Create a simple class with a main method.
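As a starting point, the class can be as small as the sketch below. The class name `CamelConsoleApp` and the `banner` helper are made up for illustration; use whatever name and package you picked in Eclipse.

```java
// Hypothetical class name; use the one you created in Eclipse.
class CamelConsoleApp {

    // Small helper so the start-up message lives in one place.
    static String banner() {
        return "Camel console app starting";
    }

    public static void main(String[] args) {
        // The Camel context and routes get added here in the following steps.
        System.out.println(banner());
    }
}
```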

Now right-click pom.xml, open the Maven menu, and add a dependency on camel-core; you can simply search for camel-core.
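If you prefer to edit pom.xml by hand, the dialog ends up adding an entry roughly like this inside the `<dependencies>` element. The version number is an assumption (a Camel 2.x release); pick whichever version the search dialog offers you.

```xml
<dependency>
  <groupId>org.apache.camel</groupId>
  <artifactId>camel-core</artifactId>
  <!-- Assumed version; use the one you selected in the dialog -->
  <version>2.25.4</version>
</dependency>
```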

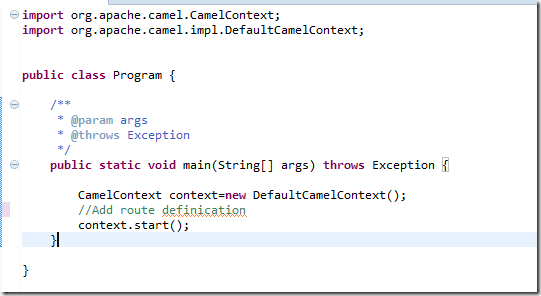

With the CamelContext initialized in the main method, the next step is adding our routes, either in XML or in Java code.

Let's start with Java code, since we get code completion there and life is a little bit easier.

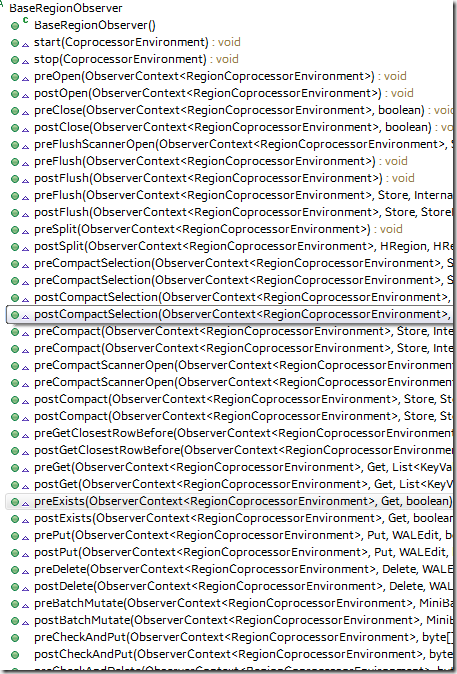

It looks like we can just add a RoutesBuilder to the context. Is there an existing implementation, or do we have to build our own?

Ask Eclipse to create a local variable for the RoutesBuilder, and let's see whether there is an existing implementation.

It turns out RoutesBuilder is an interface, and RouteBuilder is an abstract class that implements it, so let's extend that one.

Our class now extends RouteBuilder, which requires us to implement its configure method.

In the configure method, we just drop in the code snippets listed on the Camel component pages. Here is one demo that prints a message every 2 seconds:

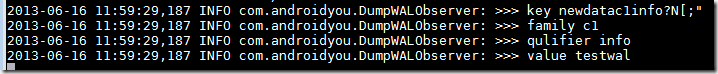
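Putting the steps so far together, a minimal sketch of the whole program might look like the following. It assumes camel-core 2.x on the classpath and Java 8 or later (for the lambda). The names `CamelConsoleApp` and `DemoRoute`, the endpoint name `demo`, the message text, and the ten-second sleep are all made up for illustration, so the code in the screenshots may differ.

```java
import org.apache.camel.CamelContext;
import org.apache.camel.builder.RouteBuilder;
import org.apache.camel.impl.DefaultCamelContext;

// Hypothetical class name; the original project may use a different one.
public class CamelConsoleApp {

    // RouteBuilder is the abstract class that implements RoutesBuilder.
    static class DemoRoute extends RouteBuilder {
        @Override
        public void configure() {
            // Fire every 2000 ms; "demo" is an arbitrary timer name.
            from("timer:demo?period=2000")
                .setBody(constant("Hello from Camel"))
                // Print directly so the output is visible without a logging backend.
                .process(exchange ->
                    System.out.println(exchange.getIn().getBody(String.class)))
                // The log component stays silent until an SLF4J binding is on the classpath.
                .to("log:demo");
        }
    }

    public static void main(String[] args) throws Exception {
        CamelContext context = new DefaultCamelContext();
        context.addRoutes(new DemoRoute());
        context.start();
        // Keep the JVM alive long enough to see a few messages (assumed 10 s).
        Thread.sleep(10_000);
        context.stop();
    }
}
```

The sleep is there because starting the context does not block the main thread; without it, the program would exit before the timer fires.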

If we run this, we can see the message shown in the console every 2 seconds.

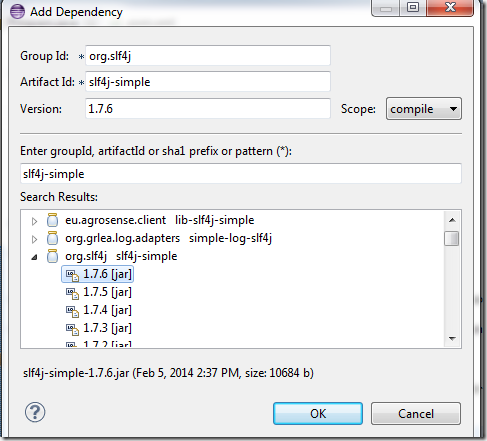

For the log component, we can't see the message, because SLF4J falls back to a no-operation (NOP) logger when no binding is on the classpath. So let's add slf4j-simple to the pom.xml.
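The entry in pom.xml would look roughly like this. The version is an assumption; any slf4j-simple release that matches the SLF4J API version pulled in by your Camel version should do.

```xml
<dependency>
  <groupId>org.slf4j</groupId>
  <artifactId>slf4j-simple</artifactId>
  <!-- Assumed version; match it to the slf4j-api version on your classpath -->
  <version>1.7.36</version>
</dependency>
```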

Run it again, and you can see the message listed in the console window.

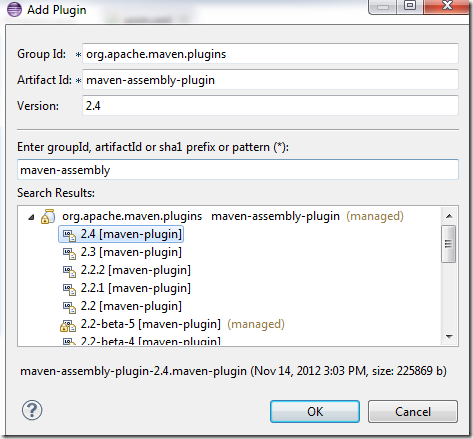

If we want to export this as an executable JAR, go to pom.xml, right-click, and use the Maven menu to add the maven-assembly-plugin.

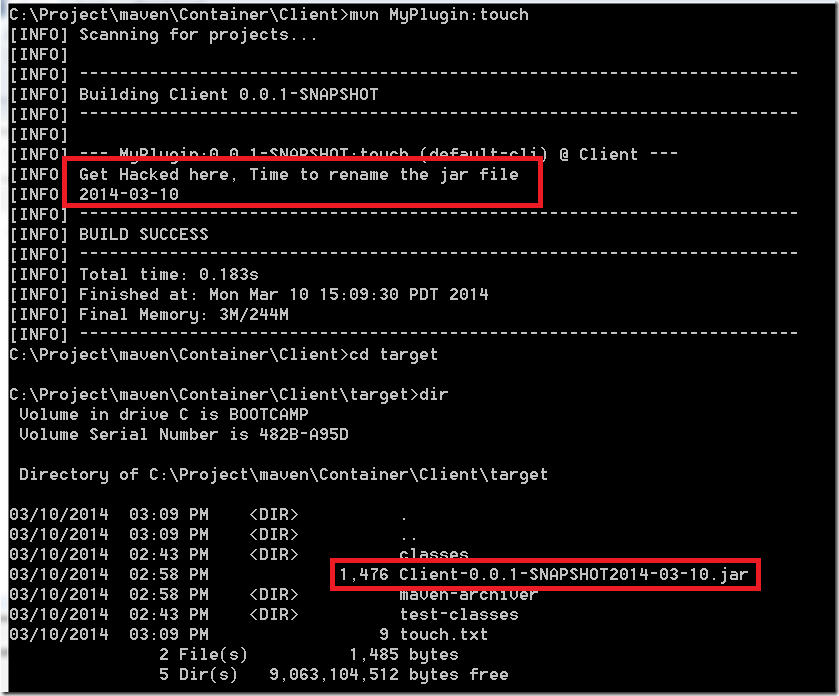

Change the pom.xml a little bit so that it points to the main class; you can copy the XML here.
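For reference, a typical maven-assembly-plugin setup for an executable JAR looks like the sketch below. The `mainClass` value is a placeholder; replace it with the fully qualified name of your own class. The `jar-with-dependencies` descriptor bundles camel-core and the other dependencies into the same JAR.

```xml
<build>
  <plugins>
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-assembly-plugin</artifactId>
      <configuration>
        <archive>
          <manifest>
            <!-- Placeholder; use your own fully qualified main class -->
            <mainClass>com.example.CamelConsoleApp</mainClass>
          </manifest>
        </archive>
        <descriptorRefs>
          <descriptorRef>jar-with-dependencies</descriptorRef>
        </descriptorRefs>
      </configuration>
    </plugin>
  </plugins>
</build>
```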

Then go to the project folder and run `mvn compile assembly:single`.

You will see a JAR in the target folder.

Now we can run the JAR directly by typing `java -jar pathtothejar`.

For the source code, check it out here: https://github.com/ryandh/apacheCamelTutorial/tree/DemoConsole

![image_thumb[2] image_thumb[2]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiOSe2kqFBXGZzECa739XChxCWELnMoQSr-4Ijc9HrjhVwZm9t6RCUo1vl3Qwi6Sqc7N9g_3UkDTt157jo4LEECsjgeLmR4s1JtJp-k9Pk3leeOLGza3npu2UKS04UDtsGiQvLRurdfIJs/?imgmax=800)

![image_thumb[3] image_thumb[3]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhE_rGzsBWm4Zz7sxcelRQKYwbUmJrsyS3SJ90L63IAFlDozirfmNm_G_uZJPVGM78O7jXTbubsAYnlmjDfS4OdO2l_aFvR2TXV7nDWRrd4ZY5zvosWqmtCIA69kzRfxhIt7hsYaUl3gYo/?imgmax=800)