Coherence has several incubator projects. Push Replication is one of them; it lets you turn on replication between several standalone clusters, e.g. cross-WAN replication between data centers.

I just read the limited documentation and tried to set up a master-slave replication between two separate clusters on my PC. On the master side, here is the cache configuration. It basically loads the default Coherence config and references the incubator POF file.

Here it just picks up the remote cluster publisher:

Using the distributed-scheme-with-publishing-cachestore scheme enables the runtime to capture each entity change to a queue, which is then flushed to the remote cluster using the remote invocation service.
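To make the capture-then-flush idea concrete, here is a minimal sketch in plain Java. It is only a toy model of the behaviour described above, not the Incubator's implementation: `PublishingSketch`, `PublishingCache`, `Change` and `flushTo` are all hypothetical names, and the "remote cluster" is just a second map.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Map;
import java.util.Queue;

// Toy model only: none of these types exist in Coherence or the Incubator.
public class PublishingSketch {

    /** One captured change: the key that was written and its new value. */
    static final class Change {
        final String key;
        final String value;
        Change(String key, String value) { this.key = key; this.value = value; }
    }

    /** Local cache that also records every put in a queue for later publishing. */
    static final class PublishingCache {
        private final Map<String, String> local = new HashMap<>();
        private final Queue<Change> pending = new ArrayDeque<>();

        void put(String key, String value) {
            local.put(key, value);               // normal local write
            pending.add(new Change(key, value)); // capture the change for the publisher
        }

        String get(String key) {
            return local.get(key);
        }

        /** Drains the queue into the remote map; returns how many changes were sent. */
        int flushTo(Map<String, String> remote) {
            int sent = 0;
            for (Change c = pending.poll(); c != null; c = pending.poll()) {
                remote.put(c.key, c.value);
                sent++;
            }
            return sent;
        }
    }

    public static void main(String[] args) {
        PublishingCache master = new PublishingCache();
        Map<String, String> slave = new HashMap<>(); // stands in for the remote cluster
        master.put("a", "2");
        int sent = master.flushTo(slave);
        System.out.println(sent + " change(s) published; slave a=" + slave.get("a"));
    }
}
```

The point of the queue is that the local write returns immediately, while delivery to the other side happens later and in order.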

All set. But when I tried to feed some data to the local cache, which is the master, a long stack trace appeared.

| Map (master): put a 2 |

On the storage node, this is the error that may have brought you here:

| </class-scheme>) java.lang.reflect.InvocationTargetException |

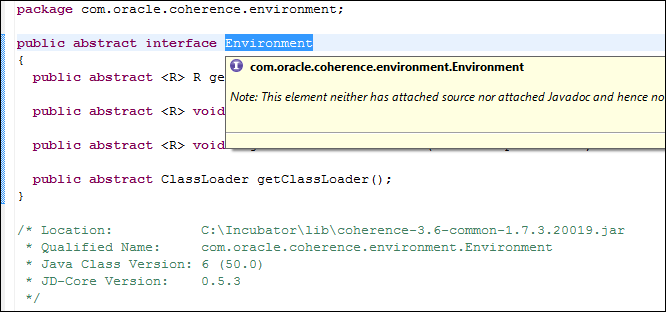

It is trying to cast DefaultConfigurableCacheFactory to Environment? Where is the Environment class located, in standard Coherence or in the Incubator project? Let me find out in Eclipse.

It is in the common lib used by Push Replication:

Checking the class hierarchy, it is another cache factory:

Then change our cache factory from the default DefaultConfigurableCacheFactory to the incubator cache factory, which will pick up settings such as the sync namespace.
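Why the swap fixes the error can be shown with a small stand-alone Java sketch. The types below are stand-ins I made up to mirror the relationship described above; they are not the real Coherence or Incubator classes. The sketch assumes the incubator factory both extends the default factory and is an Environment, which is what the class hierarchy suggested.

```java
// Stand-in types only; they mimic the relationship, not the real classes.
public class CastSketch {

    /** Stands in for the incubator's Environment type. */
    interface Environment {}

    /** Stands in for DefaultConfigurableCacheFactory: a factory, but not an Environment. */
    static class DefaultFactory {}

    /** Stands in for the incubator cache factory (assumed: a factory that is also an Environment). */
    static class IncubatorFactory extends DefaultFactory implements Environment {}

    /** Attempts the same kind of cast the push replication code performs on the configured factory. */
    static String tryCast(Object factory) {
        try {
            Environment env = (Environment) factory;
            return "ok: " + env.getClass().getSimpleName();
        } catch (ClassCastException e) {
            return "ClassCastException";
        }
    }

    public static void main(String[] args) {
        System.out.println(tryCast(new DefaultFactory()));   // the default factory fails the cast
        System.out.println(tryCast(new IncubatorFactory())); // the incubator factory passes it
    }
}
```

With the default factory configured, the cast cannot succeed no matter how the cache schemes are written, so the fix has to be made where the cache factory class is chosen.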

Old,

Then everything is back to normal. Hope it helps.