After installing and configuring Hadoop to run in fully distributed mode on CentOS, it's time to write a hello world program that runs on the Hadoop cluster in distributed mode.

I use the 1.8M text file downloaded from http://www.census.gov/tiger/tms/gazetteer/zips.txt. Here is a sample line showing the file layout:

| "01","35004","AL","ACMAR",86.51557,33.584132,6055,0.001499 |

We will write a MapReduce job that produces a basic summary: the number of cities in each state.
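Before involving Hadoop at all, the counting logic can be sketched in plain Java. This is only an illustration of the idea, not the job itself: the class and method names (`CityCountSketch`, `stateOf`, `countByState`) are made up for this example, it assumes the state code is the third comma-separated field as in the sample line above, and it assumes no field contains an embedded comma.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class CityCountSketch {

    // "Map" step: pull the state code (third field) out of one line.
    // Assumption: fields are comma-separated and never contain a comma.
    static String stateOf(String line) {
        String[] fields = line.split(",");
        return fields[2].replace("\"", "").trim();
    }

    // "Reduce" step: count how many records were seen for each state.
    static Map<String, Long> countByState(List<String> lines) {
        Map<String, Long> counts = new TreeMap<>();
        for (String line : lines) {
            counts.merge(stateOf(line), 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> sample = Arrays.asList(
            "\"01\",\"35004\",\"AL\",\"ACMAR\",86.51557,33.584132,6055,0.001499",
            // The two rows below are made-up sample data, not taken from zips.txt.
            "\"01\",\"00001\",\"AL\",\"EXAMPLEVILLE\",0.0,0.0,1,0.0",
            "\"02\",\"00002\",\"AK\",\"SAMPLETOWN\",0.0,0.0,1,0.0");
        System.out.println(countByState(sample)); // prints {AK=1, AL=2}
    }
}
```

In the real job the two steps run on different nodes: the mapper emits one (state, record) pair per line and the reducer counts the values it receives for each state.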

First, a configuration change:

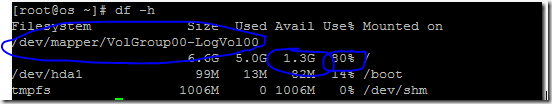

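| Since the file is only 1.8M and we have two datanodes (task nodes), we override the default block size to 500K. The default block size is far larger than the file, so without the override the file would occupy a single block; with it, both task nodes can run at the same time. >>>hdfs-site.xml <property> |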

Then we use the hadoop command line to push the file to the cluster.

| hadoop fs -mkdir raw |
| hadoop fs -copyFromLocal zip.txt raw |

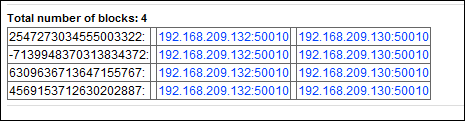

Then, from the NameNode web interface, we can see that zip.txt has 4 blocks.
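The block count follows from simple ceiling division of the file size by the block size. The sketch below is only a sanity check: it assumes "1.8M" means roughly 1.8 MiB and "500K" means 500 KiB, and the class and method names are invented for this example.

```java
class BlockCountSketch {

    // Number of blocks needed for a file: ceiling of fileSize / blockSize.
    static long blocksFor(long fileSizeBytes, long blockSizeBytes) {
        return (fileSizeBytes + blockSizeBytes - 1) / blockSizeBytes;
    }

    public static void main(String[] args) {
        long fileSize = (long) (1.8 * 1024 * 1024); // assumption: "1.8M" read as 1.8 MiB
        long blockSize = 500L * 1024;               // assumption: "500K" read as 500 KiB
        System.out.println(blocksFor(fileSize, blockSize)); // prints 4
    }
}
```

Three blocks would hold only about 1.5M, so the last part of the file spills into a fourth, smaller block.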

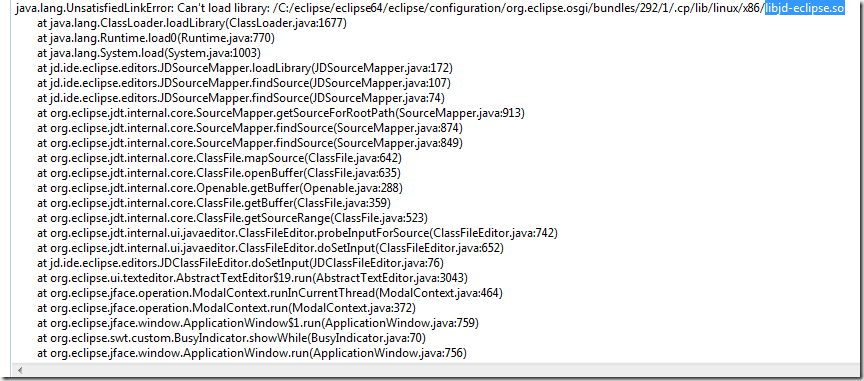

Now it is time to write a simple map/reduce Java program. Using your favorite IDE (Eclipse here), create a Java project and add the hadoop*.jar libraries to the project build path. Here is my basic program, with some comments:

| @Override |
| if (args != null && args.length != 2) { |
| Configuration conf = this.getConf(); |
| jobconf.setJobName("Hello world/City count by State"); |
| FileInputFormat.setInputPaths(jobconf, in); |
| jobconf.setInputFormat(TextInputFormat.class); |
| jobconf.setMapOutputKeyClass(Text.class); |
| jobconf.setOutputKeyClass(Text.class); |
| JobClient.runJob(jobconf); |
| return 0; |
| public static void main(String[] args) throws Exception { |
| public static class SMapClass extends MapReduceBase implements |
| @Override |
| String s = value.toString(); |
| @Override |
| long i = 0; |
| }}} |

Then use the Export menu to export the classes to a jar file, and copy the jar to the name server.

Run “hadoop jar helloworld.jar statecount raw/zip.txt ouputraw” on the name server (which is also the JobTracker server).

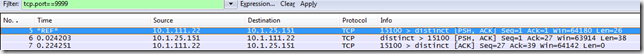

From the JobTracker web page on port 50030, we can see that two task nodes are running.

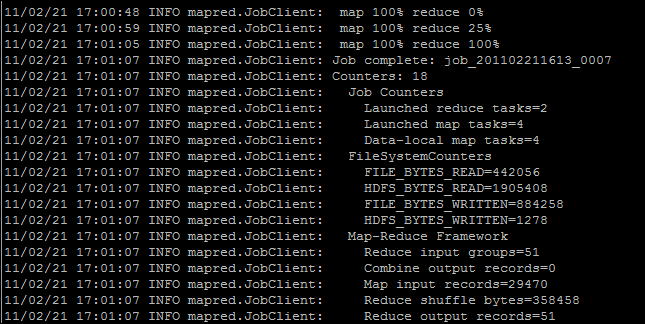

On the console where you submitted the job, you can see the job output.

After the job is done, go to the filesystem browser in the :50070 web portal to see the final result.

If you want to make sure that the reduce tasks are distributed, you can add the hostname to the reduce output and run the job again.

| output.collect(key, new Text("#number of citys" + i + "on host" + InetAddress.getLocalHost().getHostName() )); |
| and assign the reduce tasks |
| jobconf.setJobName("Hello world/City count by State"); |

In the output HDFS folder, you will see four files.

Click one file: this reduce task was executed on host Home.

Another one ran on host LA.
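Each reduce task writes its own output file, so which file a state ends up in depends on how keys are assigned to reducers. Hadoop's default hash partitioner takes the key's hash code, clears the sign bit, and takes the remainder modulo the number of reduce tasks. The sketch below imitates that rule in plain Java. It is an approximation under stated assumptions: it uses `String.hashCode()` rather than the hash of Hadoop's `Text`, so the actual assignments on a cluster will differ, and the value 4 for the number of reduce tasks is inferred from the four output files rather than taken from the job configuration.

```java
class PartitionSketch {

    // Same shape as the default hash partitioning rule:
    // non-negative hash of the key, modulo the number of reduce tasks.
    static int partitionFor(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        int numReduceTasks = 4; // assumption: one reduce task per output file
        for (String state : new String[] {"AL", "AK", "CA", "NY"}) {
            System.out.println(state + " -> reducer " + partitionFor(state, numReduceTasks));
        }
    }
}
```

All records for a given state hash to the same reducer, which is why each state appears in exactly one of the output files.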
