Sometimes you need more than one load balancer (LB) for HA, and you want a single floating virtual IP (VIP) shared across all LB nodes. Pacemaker and Corosync make this very easy. For testing purposes, I will use Vagrant to create 3 nodes (node1, node2, node3). Once booted, all 3 machines have their hostnames configured. Here is the Vagrantfile:

nodeone= 'node1'

nodetwo= 'node2'

nodethree= 'node3'

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

#### node 1###########

config.vm.define :"node1" do |node1|

node1.vm.box = BOX

node1.vm.box_url = BOX_URL

node1.vm.network :private_network, ip: "192.168.2.101"

node1.vm.hostname = nodeone

node1.vm.synced_folder ".", "/vagrant", disabled: true

node1.ssh.shell = "bash -c 'BASH_ENV=/etc/profile exec bash'"

node1.vm.provision "shell", path: "post-deploy.sh" ,run: "always"

node1.vm.provider "virtualbox" do |v|

v.customize ["modifyvm", :id, "--memory", "1300"]

v.name = nodeone

v.gui = true

end

end

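(node2 and node3 follow the same pattern with their own IP and hostname.) And here is post-deploy.sh, which appends the host entries to /etc/hosts only if they are not there yet: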

#!/bin/bash

value=$( grep -ic "entry" /etc/hosts )

if [ $value -eq 0 ]

then

echo "

################ hosts entry ############

192.168.2.101 node1

192.168.2.102 node2

192.168.2.103 node3

######################################################

" >> /etc/hosts

fi

Once done, run vagrant up node1 node2 node3 to bring up the 3 VMs.

Now vagrant ssh into each node and install the dependencies (the yum package is pcs; it provides the pcsd daemon).

yum install -y pcs pacemaker corosync

After that, enable all the daemons so they start automatically at boot.

systemctl enable pcsd

systemctl enable pacemaker

systemctl enable corosync

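Also turn off firewalld on this test setup (stop it as well, since disable only takes effect at the next boot):

systemctl stop firewalld

systemctl disable firewalld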

Now it's time to configure Corosync with the node topology.

You can just copy /etc/corosync/corosync.conf.example to /etc/corosync/corosync.conf

and change the IP and quorum settings (by default it uses multicast to maintain the cluster membership).

Then create a user named haclusteruser on all 3 nodes and give it the same password everywhere:

echo your_special_complicated_password|passwd --stdin haclusteruser

Once done, you can call pcs cluster auth to authorize each host using the shared credential:

pcs cluster auth node1 node2 node3

After this, start corosync and pacemaker on all nodes.

Run crm_mon to see all the nodes.

Now we can create a floating VIP for HA.

pcs resource create VIP2 IPaddr2 ip=192.168.2.100 cidr_netmask=32 nic=enp0s8 op monitor interval=30s

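After that, you might see that the resource status is always Stopped. Pacemaker refuses to start resources while STONITH is enabled and no fencing device is configured, so on this test cluster we need to disable STONITH: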

pcs property set stonith-enabled=false

Check the status again: the VIP is now bound to one node (node1 here).

To test HA, shut down node1 and run crm_mon again: the VIP has moved to node2.

Monday, October 24, 2016

Wednesday, August 10, 2016

How to locate Magento credit card leakage

I just got a chance to help a customer track down a leak: their own customers were complaining that their credit card data leaked after making a purchase.

It turned out to be a very smart hacker. Here are the steps I took to locate the issue.

I used tcpdump to capture all traffic for a couple of hours and did a quick analysis to look for anything unusual, like credit card data being sent out over SMTP on port 25 or through a standard HTTP POST. If the traffic is HTTPS, you can sort by TLS certificate to spot any unusual certificate that points to an evil third-party host.

I found nothing special. Most of it was just traffic to the site itself and some third-party API calls like shipping rate calculation and FedEx integration.

Then I analyzed the request log to see whether any URL got a huge number of hits from the same IP.

You can use some handy Linux commands to do the aggregation.

awk '{print $8$2}' requestlog|sort|uniq -c|sort -r -n|more

Nothing special here either; most hits were from crawler IPs such as Google's.
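The awk aggregation above can also be sketched in Python, which is handy if you want to filter or post-process the counts. This is only a sketch: `count_hits` is a hypothetical helper, and the sample lines assume a made-up log layout where field 2 is the client IP and field 8 is the URL. Adjust the field numbers to your own request log format.

```python
from collections import Counter


def count_hits(lines, fields=(8, 2)):
    """Count lines by the concatenation of the given 1-based fields.

    Mirrors: awk '{print $8$2}' | sort | uniq -c | sort -r -n
    """
    counts = Counter()
    for line in lines:
        parts = line.split()
        # Like awk, treat a missing field as an empty string.
        key = "".join(parts[i - 1] if i <= len(parts) else "" for i in fields)
        counts[key] += 1
    # Highest count first.
    return counts.most_common()


# Hypothetical log lines: field 2 = client IP, field 8 = URL.
sample = [
    "t1 10.0.0.1 - - - GET 200 /checkout",
    "t2 10.0.0.1 - - - GET 200 /checkout",
    "t3 10.0.0.2 - - - GET 200 /home",
]

for key, hits in count_hits(sample):
    print(hits, key)
```

For a real log you would pass the open file object instead of `sample`, for example `count_hits(open("requestlog"))`.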

I felt a little bit frustrated by now; this must be a smart hacker. Next I watched for files changed within the last 5 minutes. If the hacker intercepts the request and stores it somewhere, I will definitely find out where the file is stored.

Going to the root directory of the Magento install, I inspected all files changed within the last 5 minutes (I had just placed an order using a dummy credit card).

find . -mmin -5 -ls

There we go: one file called db-tab-footer_bg.gif was changed minutes ago. There should not be any changes to GIF files. It turned out not to be a normal image at all: the whole file is encrypted data (it must be the credit cards).

Checking the access log, there were not a lot of requests to this file, only about one a week, coming from the hacker's IP.
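The find . -mmin -5 check used to spot that file can be sketched in Python as well, for example to run it on a schedule and diff the results. This is a minimal sketch, not the exact tool I used: `recently_modified` is a hypothetical helper, and unlike find it reports regular files only, not directories.

```python
import os
import time


def recently_modified(root, minutes=5, now=None):
    """Return sorted paths of files under root modified in the last `minutes`."""
    now = time.time() if now is None else now
    cutoff = now - minutes * 60
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # lstat: do not follow symlinks, same as find's default.
                mtime = os.lstat(path).st_mtime
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if mtime > cutoff:
                hits.append(path)
    return sorted(hits)
```

Calling `recently_modified(".")` from the Magento root right after placing a test order gives the same kind of list as the find command. Keep in mind that an attacker can reset modification times, so an empty result is not proof that nothing was written.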

Now it was time to find out how they capture the data and dump it into this file.

Just search all PHP files for the string db-tab-footer_bg.gif.
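A literal search like this can be sketched as follows. `files_containing` is a hypothetical helper, and the sketch assumes the backdoor references the file name in plain text; if the name is obfuscated (base64, string concatenation), a literal search will miss it.

```python
import os


def files_containing(root, needle, suffix=".php"):
    """Return sorted paths under root ending in `suffix` that contain `needle`."""
    needle_bytes = needle.encode("utf-8")
    matches = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(suffix):
                continue
            path = os.path.join(dirpath, name)
            try:
                # Read as bytes so odd encodings in PHP files cannot raise.
                with open(path, "rb") as handle:
                    if needle_bytes in handle.read():
                        matches.append(path)
            except OSError:
                continue  # unreadable file; skip it
    return sorted(matches)
```

From the Magento root, `files_containing(".", "db-tab-footer_bg.gif")` lists every PHP file that mentions the suspicious file.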

It's in the global config file. Essentially they intercept all POST requests, encrypt the data using their RSA public key, and write it to the GIF file. Without the matching private key, nobody else can decrypt it.

Now it's time to get rid of the backdoor and do the housekeeping to find out how they injected it on the server.

Sunday, July 31, 2016

Group All established connections by target IP

Here is a very common case: you want to check whether connections are spread evenly across a distributed system on the server side, or whether the client sends its requests across all server endpoints.

Basic idea: netstat -an|grep 'EST' will show you all the established connections.

The 4th column is the client (local) endpoint, and the 5th column is the target.

To see how many connections there are per target IP, run the following command. (This is the BSD/macOS form, where addresses are printed as ip.port; on Linux the port separator is a colon.)

netstat -an -p tcp|grep "EST"|awk '{print $5}'|awk -F "." '{print $1"."$2"."$3"."$4 }'|sort |uniq -c |sort -n -r

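If we only want to see the top 5, cut the list after the final sort. A row filter such as FNR<6 in the awk step would only keep the first 5 connections before they are counted, which is not the same thing.

netstat -an -p tcp|grep "EST"|awk '{print $5}'|awk -F "." '{print $1"."$2"."$3"."$4 }'|sort |uniq -c |sort -n -r|head -5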
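The same grouping can be sketched in Python if you want to feed the counts into something else. `top_targets` is a hypothetical helper. The sketch assumes BSD/macOS-style netstat output, where the foreign address is the 5th column in ip.port form and the state is the 6th column; the sample lines are made up.

```python
from collections import Counter


def top_targets(netstat_lines, limit=5):
    """Count ESTABLISHED connections per target IPv4 address, highest first."""
    counts = Counter()
    for line in netstat_lines:
        cols = line.split()
        if len(cols) < 6 or cols[5] != "ESTABLISHED":
            continue
        # "192.168.2.101.80" -> "192.168.2.101" (same as the awk -F "." step)
        ip = ".".join(cols[4].split(".")[:4])
        counts[ip] += 1
    return counts.most_common(limit)


# Made-up sample in BSD/macOS netstat layout.
sample = [
    "tcp4  0  0  192.168.2.10.52344  192.168.2.101.80  ESTABLISHED",
    "tcp4  0  0  192.168.2.10.52345  192.168.2.101.80  ESTABLISHED",
    "tcp4  0  0  192.168.2.10.52346  192.168.2.102.80  ESTABLISHED",
    "tcp4  0  0  *.22  *.*  LISTEN",
]

for ip, connections in top_targets(sample):
    print(connections, ip)
```

Note that the limit is applied after counting, which matches putting head -5 at the end of the shell pipeline.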

Tuesday, May 17, 2016

Spark window function, failure: ``union'' expected but `(' found

This is a very weird error that I hit when running a simple window ranking query; everything looks good from a syntax perspective.

from pyspark.sql import Row

# sc is an existing SparkContext and sql is an existing SQLContext
team = [("Lakers", "WEST", 29), ("Golden State", "WEST", 89),
        ("MIA HEAT", "EAST", 79), ("SAS", "WEST", 9), ("RAPTORS", "EAST", 29)]

rows = sc.parallelize(team).map(lambda x: Row(Team=x[0], Division=x[1], Score=x[2]))
sql.createDataFrame(rows).registerAsTable("team")

print(sql.sql("SELECT team, division, score, rank() OVER (PARTITION BY division ORDER BY score desc)"
              " as rank FROM team").take(10))

And I got this error complaining about the syntax:

py4j.protocol.Py4JJavaError: An error occurred while calling o36.sql.

: java.lang.RuntimeException: [1.43] failure: ``union'' expected but `(' found

SELECT team, division, score, rank() OVER (PARTITION BY division ORDER BY score desc) as rank FROM team

^

at scala.sys.package$.error(package.scala:27)

at org.apache.spark.sql.catalyst.AbstractSparkSQLParser.parse(AbstractSparkSQLParser.scala:36)

at org.apache.spark.sql.catalyst.DefaultParserDialect.parse(ParserDialect.scala:67)

at org.apache.spark.sql.SQLContext$$anonfun$2.apply(SQLContext.scala:211)

at org.apache.spark.sql.SQLContext$$anonfun$2.apply(SQLContext.scala:211)

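To fix this, make sure you are using HiveContext instead of SQLContext. In Spark 1.x the default SQLContext parser does not support window functions, which is why it stops at the parenthesis after OVER; HiveContext parses the query as HiveQL, which does support them.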

How to Run Spark testing application in your fav Python IDE

Here is a quick way to run and test your Spark application from a Python IDE. Essentially, we need to load the dependency modules, set up the environment, and create the context.

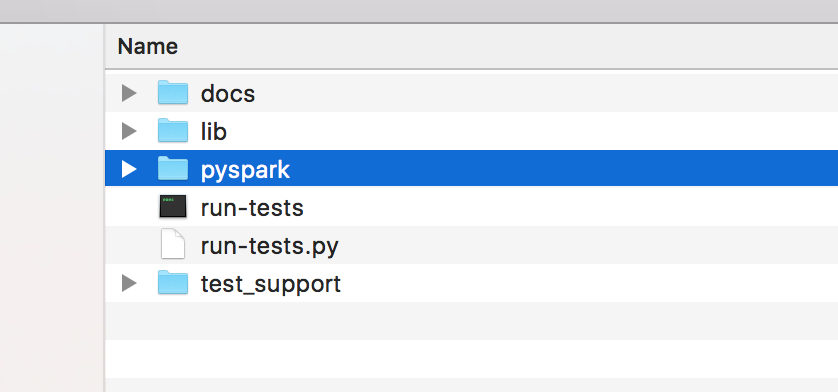

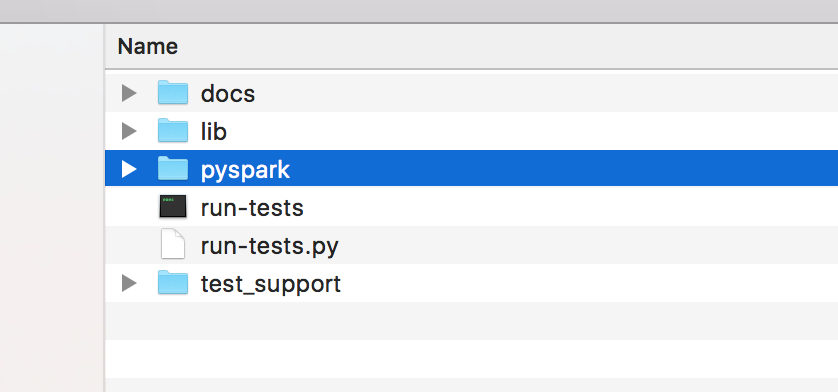

1. Copy the pyspark folder from the standard Spark distribution into your project folder.

2. Set up some bootstrap code to take care of the environment, using the following code. I use 1.6.1 as an example, and you may want to put this in a module.

import os
import sys


class Setup(object):
    def setupSpark(self):
        os.environ["SPARK_HOME"] = "/Users/and/Development/spark/spark-1.6.1-bin-hadoop2.6/"
        os.environ["PYSPARK_SUBMIT_ARGS"] = "--master local[2]"
        spark_home = os.environ.get("SPARK_HOME")
        spark_release_file = spark_home + "/RELEASE"
        if os.path.exists(spark_release_file) and "Spark 1.6.1" in open(spark_release_file).read():
            # PYSPARK_SUBMIT_ARGS must end with "pyspark-shell"
            pyspark_submit_args = os.environ.get("PYSPARK_SUBMIT_ARGS", "")
            if "pyspark-shell" not in pyspark_submit_args:
                pyspark_submit_args += " pyspark-shell"
            os.environ["PYSPARK_SUBMIT_ARGS"] = pyspark_submit_args
        # make pyspark and its bundled py4j importable
        sys.path.insert(0, spark_home + "/python")
        sys.path.insert(0, os.path.join(spark_home, "python/lib/py4j-0.9-src.zip"))
        import pyspark  # imported here, after sys.path has been set up
        return pyspark.SparkContext()

3. You are good to go.

from lib.setup import Setup

sc = Setup().setupSpark()
print(sc.parallelize(range(1, 10)).count())
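One fragile spot in the bootstrap is the hard-coded py4j-0.9-src.zip, because the py4j version changes between Spark releases. A small lookup like the one below avoids editing the path on every upgrade. This is only a sketch: `find_py4j_zip` is a hypothetical helper, and it assumes the usual python/lib layout of a Spark binary distribution.

```python
import glob
import os


def find_py4j_zip(spark_home):
    """Return the py4j source zip under spark_home/python/lib, or None."""
    pattern = os.path.join(spark_home, "python", "lib", "py4j-*-src.zip")
    matches = sorted(glob.glob(pattern))
    # A distribution normally ships exactly one; take the last if there are more.
    return matches[-1] if matches else None
```

In the bootstrap you would then insert the result of `find_py4j_zip(spark_home)` into sys.path instead of the fixed file name, after checking that it is not None.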
