one of the enhancement of Coherence 3.6 is the Coherence Query language, they call CohQL. Shall I spell CQL along with LinQ, SQL?

Before this version. you have to hardcode different filters to enable filtering which corresponds to Where clause in SQL. It will be more intuitive to write a query just like the SQL. Now it is possible in 3.6

SELECT (properties* aggregators* | * | alias) FROM "cache-name" [[AS] alias] [WHERE conditional-expression] [GROUP [BY] properties+]

Given a POJO Class PurchaseOrder with three attributes PoAmount, State, PMName.

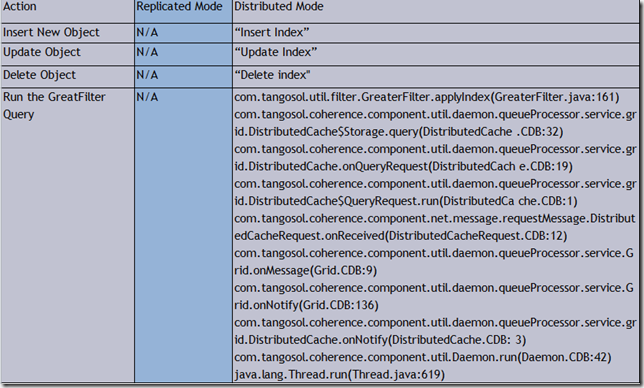

if you want to query those POs in a given state and With a least amount. you need Three Filters. might Be

| Filter gt=new GreaterFilter("getPoAmount", 7.0f); |

In 3.6, you can just put a query like the where clause syntax directly. the following code will do the same query

| Filter AdHoc=com.tangosol.util.QueryHelper.createFilter("PoAmount >7.0f AND State='CA'"); |

If you use Coherence to store a lot data for Analytics, another good news is that it comes up with a new utility like the SQL client for DB.

before, you want to run a group by State and get the average poAmount.

| EntryAggregator gp=GroupAggregator.createInstance("getState", new DoubleAverage("getPoAmount")); |

you will get some result like

| {CA=46.42647790186333, OR=51.46033203601837, WA=46.86704759886771} |

Now with the new query client. you just run a sql like group

Is it sweet? I think So.

even some management features like Backup DB

More query Syntax and support, check http://download.oracle.com/docs/cd/E15357_01/coh.360/e15723/api_cq.htm#CEGDBJBI