I just set up 8 VMs and loaded them with DSE 2.0. One new feature of DSE 2.0 is Solr support. When I run nodetool, you can see it has created a virtual data center called Solr, in addition to the Analytics data center for Hadoop.

OpsCenter shows the same layout visually: 3 virtual data centers.

Now let's test the first case: loading a CSV file into CassandraFS (which plays the role of HDFS).

I googled for a sample CSV file and picked this one: http://jasperreports.sourceforge.net/sample.reference/csvdatasource/

It contains rows like "Dallas",47,"Janet Fuller","445 Upland Pl.","Trial", which map to the fields {"city", "id", "name", "address", "state"}.
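To make the column mapping concrete, here is a minimal Python sketch, using only the standard `csv` module, that splits the sample row positionally into those five fields. The helper name `parse_rows` and the conversion of `id` to an integer are illustrative assumptions, not part of the original walkthrough.

```python
import csv
import io

# Column order taken from the sample data description above.
FIELDS = ["city", "id", "name", "address", "state"]


def parse_rows(text):
    """Parse CSV text into dicts keyed by FIELDS (hypothetical helper)."""
    rows = []
    for record in csv.reader(io.StringIO(text)):
        if not record:
            continue  # skip blank lines
        row = dict(zip(FIELDS, record))
        row["id"] = int(row["id"])  # the id column is numeric in the sample
        rows.append(row)
    return rows


if __name__ == "__main__":
    sample = '"Dallas",47,"Janet Fuller","445 Upland Pl.","Trial"\n'
    print(parse_rows(sample))
```

The `csv` reader removes the surrounding double quotes, so `city` comes back as `Dallas` rather than `"Dallas"`.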

So I pasted this data into a local file called state.csv and copied it to CassandraFS:

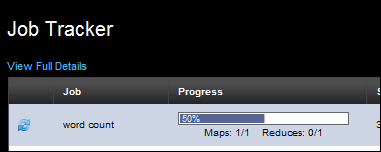

Now let's run a wordcount MapReduce job on the cluster, powered by Cassandra.

You can see the running job in OpsCenter.

Once it is done, we can view the result.
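The post does not show the job's source, but the classic Hadoop WordCount example splits each line on whitespace, emits (word, 1) from the mapper, and sums the ones per word in the reducer. A minimal in-memory Python sketch of that data flow follows; it is not Hadoop code, and the function names are hypothetical.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Mapper: emit (token, 1) for every whitespace-separated token."""
    return [(token, 1) for token in line.split()]


def reduce_phase(pairs):
    """Shuffle + reducer: group pairs by token and sum their counts."""
    counts = defaultdict(int)
    for token, one in pairs:
        counts[token] += one
    return dict(counts)


def word_count(lines):
    """Run the map phase on every line, then reduce all emitted pairs."""
    return reduce_phase(chain.from_iterable(map_phase(line) for line in lines))


if __name__ == "__main__":
    row = '"Dallas",47,"Janet Fuller","445 Upland Pl.","Trial"'
    for token, count in sorted(word_count([row, row]).items()):
        print(count, token)
```

Because tokens are split only on whitespace, a CSV row yields tokens such as `Upland` and `Fuller","445` rather than clean field values, which is worth keeping in mind when reading the job's output.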

Now that the data is there, we just create a Hive table and run the first test.

Create a Hive Table and Load Data from CassandraFS

When you enable the Analytics role, those nodes work like Hadoop nodes, so I can run this test on any node allocated to the Analytics data center.

Run dsetool to identify the current JobTracker (JT) node.

Then just run dse hive to get into the Hive CLI shell.

Then load the data from CassandraFS into Hive using standard Hive syntax. The data is now there:

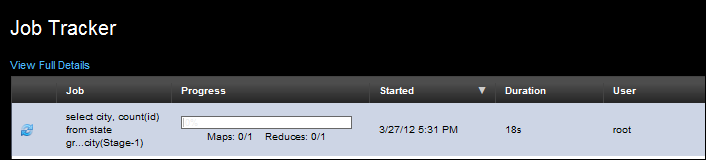
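The exact statements are only visible in the screenshot. As a hedged illustration, the Python sketch below just assembles standard HiveQL text for a comma-delimited table over the five sample columns and a `LOAD DATA INPATH` into it; the table name `state_csv`, the column types, and the path `/state.csv` are hypothetical placeholders, not values from the post.

```python
# Hypothetical column types for the five sample fields.
FIELDS = [("city", "STRING"), ("id", "INT"), ("name", "STRING"),
          ("address", "STRING"), ("state", "STRING")]


def create_table_ddl(table, fields):
    """Build a CREATE TABLE statement for a comma-delimited text table."""
    columns = ", ".join(f"{name} {hive_type}" for name, hive_type in fields)
    return (f"CREATE TABLE {table} ({columns}) "
            "ROW FORMAT DELIMITED FIELDS TERMINATED BY ','")


def load_data_stmt(path, table):
    """Build a LOAD DATA INPATH statement that moves a file into the table."""
    return f"LOAD DATA INPATH '{path}' INTO TABLE {table}"


if __name__ == "__main__":
    # Hypothetical table name and CassandraFS path; adjust to your setup.
    print(create_table_ddl("state_csv", FIELDS) + ";")
    print(load_data_stmt("/state.csv", "state_csv") + ";")
```

Two caveats with this design: without `LOCAL`, `LOAD DATA INPATH` moves the file into the table's directory rather than copying it, and a plain delimited format does not strip the double quotes, so `city` would hold `"Dallas"` including the quotes.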

Then we can write a query that groups by city.

In OpsCenter, you can see the Hadoop job submitted to the JobTracker.

Once it finishes, you get a result like this, which is no surprise at all.
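The grouping query itself is not spelled out in the text. Assuming it counts rows per city, i.e. something like `SELECT city, COUNT(*) FROM state_csv GROUP BY city` (with `state_csv` a hypothetical table name), this Python sketch computes the same aggregation in memory, which is handy for sanity-checking the Hive output on a small file.

```python
from collections import Counter


def count_by_city(rows):
    """In-memory equivalent of SELECT city, COUNT(*) ... GROUP BY city."""
    return Counter(row["city"] for row in rows)


if __name__ == "__main__":
    # Hypothetical rows shaped like the sample CSV; only "city" matters here.
    rows = [
        {"city": "Dallas", "id": 47},
        {"city": "Dallas", "id": 48},
        {"city": "Boston", "id": 1},
    ]
    for city, n in sorted(count_by_city(rows).items()):
        print(city, n)
```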

That wraps up the first test case: load data into CassandraFS, create a Hive table, load the data from CassandraFS into it, and run a query.

Next, let's create another case. Like the HBase-Hive handler, we create a Hive table that maps to a Cassandra ColumnFamily, so we can save query results to this Hive table, backed by the Cassandra ColumnFamily data.
