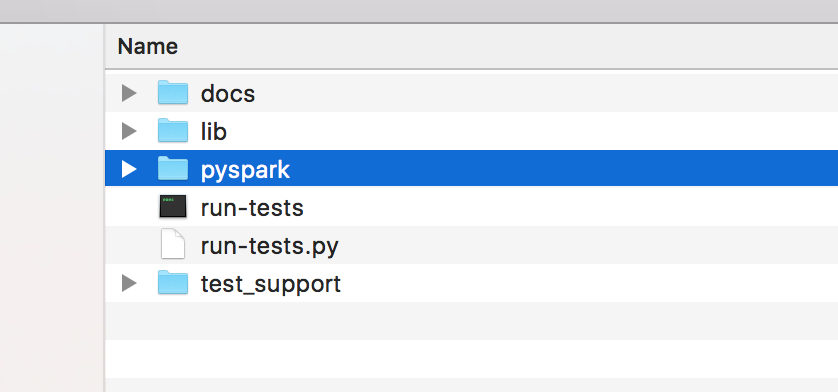

1. Copy the pyspark folder from the standard Spark distribution into your project folder.

2. Set up a small bootstrap that takes care of the environment, using the following code. I use Spark 1.6.1 as an example, and you may put this in a module.

    import os
    import sys

    class Setup(object):
        def setupSpark(self):
            # Point at the local Spark install and run with two local threads.
            os.environ["SPARK_HOME"] = "/Users/and/Development/spark/spark-1.6.1-bin-hadoop2.6/"
            os.environ["PYSPARK_SUBMIT_ARGS"] = "--master local[2]"
            spark_home = os.environ.get("SPARK_HOME")
            spark_release_file = spark_home + "/RELEASE"
            # Spark 1.6.1 expects "pyspark-shell" at the end of the submit args.
            if os.path.exists(spark_release_file) and "Spark 1.6.1" in open(spark_release_file).read():
                pyspark_submit_args = os.environ.get("PYSPARK_SUBMIT_ARGS", "")
                if "pyspark-shell" not in pyspark_submit_args:
                    pyspark_submit_args += " pyspark-shell"
                os.environ["PYSPARK_SUBMIT_ARGS"] = pyspark_submit_args
            # Make pyspark and its bundled py4j importable.
            sys.path.insert(0, spark_home + "/python")
            sys.path.insert(0, os.path.join(spark_home, "python/lib/py4j-0.9-src.zip"))
            # Import only after the paths are set, since pyspark needs py4j at import time.
            import pyspark
            return pyspark.SparkContext()
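The `PYSPARK_SUBMIT_ARGS` handling in the bootstrap can be pulled out into a small helper, which makes it easy to check without a Spark install. This is only a sketch: the name `with_pyspark_shell` is made up for illustration and is not part of pyspark, and unlike the bootstrap it also strips the leading space when the arguments start out empty.

```python
def with_pyspark_shell(submit_args):
    """Return submit_args with 'pyspark-shell' appended if it is missing."""
    if "pyspark-shell" not in submit_args:
        # strip() avoids a leading space when submit_args is empty
        submit_args = (submit_args + " pyspark-shell").strip()
    return submit_args


# The value used in the bootstrap for a two-thread local master.
print(with_pyspark_shell("--master local[2]"))
```

Calling it twice on the same string leaves the result unchanged, so it is safe to run the bootstrap more than once in one process.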

3. You are good to go:

    from lib.setup import Setup

    sc = Setup().setupSpark()
    print(sc.parallelize(range(1, 10)).count())
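The copy in step 1 can also be scripted rather than done by hand. The sketch below assumes the pyspark folder sits at `python/pyspark` under the Spark distribution, which matches the `python` path the bootstrap adds to `sys.path`; the function name `copy_pyspark` and its arguments are hypothetical.

```python
import os
import shutil


def copy_pyspark(spark_home, project_dir):
    """Copy <spark_home>/python/pyspark into project_dir and return the new path."""
    src = os.path.join(spark_home, "python", "pyspark")
    dst = os.path.join(project_dir, "pyspark")
    if not os.path.isdir(src):
        raise IOError("no pyspark folder found at " + src)
    if os.path.exists(dst):
        # Replace a stale copy so the project matches the chosen Spark version.
        shutil.rmtree(dst)
    shutil.copytree(src, dst)
    return dst
```

A call such as `copy_pyspark(os.environ["SPARK_HOME"], ".")` would place the folder next to your own code, assuming `SPARK_HOME` is already set.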
