I get a very weird error when I try to run a simple window ranking, even though everything looks fine from a syntax perspective:

    from pyspark.sql import Row, SQLContext

    sql = SQLContext(sc)  # sc is the existing SparkContext

    team = [("Lakers", "WEST", 29), ("Golden State", "WEST", 89),
            ("MIA HEAT", "EAST", 79), ("SAS", "WEST", 9),
            ("RAPTORS", "EAST", 29)]

    sql.createDataFrame(
        sc.parallelize(team).map(lambda x: Row(Team=x[0], Division=x[1], Score=x[2]))
    ).registerTempTable("team")  # registerAsTable() is a deprecated alias

    print(sql.sql("SELECT team, division, score, "
                  "rank() OVER (PARTITION BY division ORDER BY score desc) as rank "
                  "FROM team").take(10))
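For reference, here is a plain-Python sketch of what the ranking query is meant to return on this data. `window_rank` is a hypothetical helper, not a Spark API: it mimics SQL `rank()` semantics (rows with equal scores in a division share a rank, and the next rank skips ahead) and sorts divisions alphabetically so the output is deterministic, whereas Spark does not guarantee row order.

```python
from collections import defaultdict

team = [("Lakers", "WEST", 29), ("Golden State", "WEST", 89),
        ("MIA HEAT", "EAST", 79), ("SAS", "WEST", 9),
        ("RAPTORS", "EAST", 29)]


def window_rank(rows):
    """Emulate rank() OVER (PARTITION BY division ORDER BY score DESC).

    Hypothetical helper for illustration only; ties share a rank and the
    following rank skips (1, 1, 3), as SQL RANK() does.
    """
    # PARTITION BY division
    by_division = defaultdict(list)
    for name, division, score in rows:
        by_division[division].append((name, division, score))

    result = []
    for division in sorted(by_division):
        # ORDER BY score DESC within the partition
        partition = sorted(by_division[division], key=lambda r: r[2], reverse=True)
        for name, div, score in partition:
            # rank = 1 + number of rows in the partition with a strictly higher score
            rank = 1 + sum(1 for other in partition if other[2] > score)
            result.append((name, div, score, rank))
    return result


for row in window_rank(team):
    print(row)
# ('MIA HEAT', 'EAST', 79, 1)
# ('RAPTORS', 'EAST', 29, 2)
# ('Golden State', 'WEST', 89, 1)
# ('Lakers', 'WEST', 29, 2)
# ('SAS', 'WEST', 9, 3)
```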

And I got this error complaining about the syntax:

    4j.protocol.Py4JJavaError: An error occurred while calling o36.sql.
    : java.lang.RuntimeException: [1.43] failure: ``union'' expected but `(' found

    SELECT team, division, score, rank() OVER (PARTITION BY division ORDER BY score desc) as rank FROM team
                                              ^
        at scala.sys.package$.error(package.scala:27)
        at org.apache.spark.sql.catalyst.AbstractSparkSQLParser.parse(AbstractSparkSQLParser.scala:36)
        at org.apache.spark.sql.catalyst.DefaultParserDialect.parse(ParserDialect.scala:67)
        at org.apache.spark.sql.SQLContext$$anonfun$2.apply(SQLContext.scala:211)
        at org.apache.spark.sql.SQLContext$$anonfun$2.apply(SQLContext.scala:211)

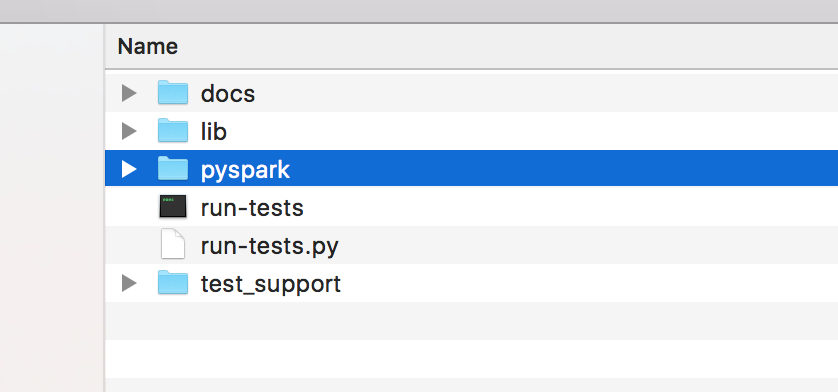

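To fix this, make sure you are using `HiveContext` instead of `SQLContext`. In Spark 1.x, window functions such as `rank() OVER (...)` are only understood by the HiveQL parser that `HiveContext` uses; the default `SQLContext` parser stops at `OVER (` and reports the parse failure shown in the trace.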
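A minimal sketch of the fix, assuming Spark 1.4 to 1.6 built with Hive support and an existing SparkContext `sc`. The names `TEAMS`, `RANK_QUERY` and `rank_teams` are hypothetical, chosen for illustration. Note that `HiveContext` was deprecated in Spark 2.0 and removed in 3.0; from 2.0 on, the built-in parser behind `SparkSession` handles window functions directly.

```python
# Sketch assuming Spark 1.4-1.6 with Hive support (HiveContext is gone in Spark 3.0).

TEAMS = [("Lakers", "WEST", 29), ("Golden State", "WEST", 89),
         ("MIA HEAT", "EAST", 79), ("SAS", "WEST", 9),
         ("RAPTORS", "EAST", 29)]

# Same query as in the question.
RANK_QUERY = ("SELECT team, division, score, "
              "rank() OVER (PARTITION BY division ORDER BY score desc) as rank "
              "FROM team")


def rank_teams(sc, teams=TEAMS):
    """Run the window-ranking query through a HiveContext.

    `sc` is an existing SparkContext. pyspark is imported inside the function
    so this module can be loaded even where Spark is not installed.
    """
    from pyspark.sql import HiveContext, Row

    sql = HiveContext(sc)  # HiveQL parser accepts OVER (...)
    rows = sc.parallelize(teams).map(
        lambda x: Row(Team=x[0], Division=x[1], Score=x[2]))
    sql.createDataFrame(rows).registerTempTable("team")
    return sql.sql(RANK_QUERY).collect()
```

From a PySpark 1.x shell, `print(rank_teams(sc))` runs the same query as in the question; the only change is the context that parses it.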