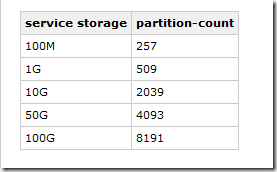

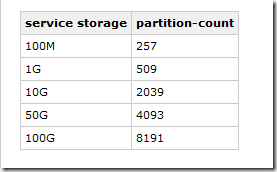

If you use the distributed cache scheme in a Coherence cluster, all the data is distributed across the storage nodes. The partition count should be chosen based on the data size; the default is 257, which is meant for less than 100M of data.

The partition count is the `partition-count` setting in the cache scheme, and you can always override it.

Here I changed the partition-count to 13 and set the backup count to 1 (each piece of data will have one backup copy).

Then attach the following BTrace script to any node.

```java
// Older BTrace releases use the com.sun.btrace packages; newer ones moved to org.openjdk.btrace.
import com.sun.btrace.annotations.*;
import static com.sun.btrace.BTraceUtils.*;

// The class name is arbitrary; BTrace only needs the @BTrace annotation.
@BTrace
public class PartitionTrace {

    // Fires every time DistributedCache.getStorageAssignments returns.
    @OnMethod(clazz = "com.tangosol.coherence.component.util.daemon.queueProcessor.service.grid.DistributedCache",
              method = "getStorageAssignments",
              location = @Location(value = Kind.RETURN))
    public static void getStorageAssignments(@Return int[][] assignment) {
        println("getStorageAssignments");
        // BTrace's safe mode does not allow loops, so each of the 13 partitions is printed explicitly.
        // assignment[p][0] is the primary member of partition p, assignment[p][1] its backup.
        print("Key \t0\t Primary "); print(assignment[0][0]); print("\t Backup "); println(assignment[0][1]);
        print("Key \t1\t Primary "); print(assignment[1][0]); print("\t Backup "); println(assignment[1][1]);
        print("Key \t2\t Primary "); print(assignment[2][0]); print("\t Backup "); println(assignment[2][1]);
        print("Key \t3\t Primary "); print(assignment[3][0]); print("\t Backup "); println(assignment[3][1]);
        print("Key \t4\t Primary "); print(assignment[4][0]); print("\t Backup "); println(assignment[4][1]);
        print("Key \t5\t Primary "); print(assignment[5][0]); print("\t Backup "); println(assignment[5][1]);
        print("Key \t6\t Primary "); print(assignment[6][0]); print("\t Backup "); println(assignment[6][1]);
        print("Key \t7\t Primary "); print(assignment[7][0]); print("\t Backup "); println(assignment[7][1]);
        print("Key \t8\t Primary "); print(assignment[8][0]); print("\t Backup "); println(assignment[8][1]);
        print("Key \t9\t Primary "); print(assignment[9][0]); print("\t Backup "); println(assignment[9][1]);
        print("Key \t10\t Primary "); print(assignment[10][0]); print("\t Backup "); println(assignment[10][1]);
        print("Key \t11\t Primary "); print(assignment[11][0]); print("\t Backup "); println(assignment[11][1]);
        print("Key \t12\t Primary "); print(assignment[12][0]); print("\t Backup "); println(assignment[12][1]);
    }
}
```

Let’s start with one proxy node and two storage nodes.

Here is the partition output (keys 0-12). Node 1 is the proxy node; nodes 3 and 2 are the storage nodes. Each storage node is primary for about half of the partitions and simply keeps the backup copy of the other node's partitions.

```
getStorageAssignments
Key 0 Primary 3 Backup 2
Key 1 Primary 3 Backup 2
Key 2 Primary 3 Backup 2
Key 3 Primary 3 Backup 2
Key 4 Primary 3 Backup 2
Key 5 Primary 3 Backup 2
Key 6 Primary 2 Backup 3
Key 7 Primary 2 Backup 3
Key 8 Primary 2 Backup 3
Key 9 Primary 2 Backup 3
Key 10 Primary 2 Backup 3
Key 11 Primary 2 Backup 3
Key 12 Primary 2 Backup 3
```

If I add one more storage node with ID 5, the new node takes over some of the partitions, so that each storage node holds a roughly equal share of the data.

```
Key 0 Primary 5 Backup 2
Key 1 Primary 3 Backup 5
Key 2 Primary 5 Backup 2
Key 3 Primary 3 Backup 5
Key 4 Primary 3 Backup 2
Key 5 Primary 3 Backup 2
Key 6 Primary 5 Backup 3
Key 7 Primary 5 Backup 3
Key 8 Primary 2 Backup 5
Key 9 Primary 2 Backup 5
Key 10 Primary 2 Backup 3
Key 11 Primary 2 Backup 3
Key 12 Primary 2 Backup 3
```
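To check how even the split really is, the three-storage-node table above can be tallied per member. This is only a small standalone sketch: the class and method names are made up for illustration, and the array is typed in by hand from the output rather than read from Coherence.

```java
import java.util.Map;
import java.util.TreeMap;

final class AssignmentBalance {
    // Rows copied by hand from the three-storage-node output: {primary, backup} per partition 0..12.
    static final int[][] ASSIGNMENT = {
        {5, 2}, {3, 5}, {5, 2}, {3, 5}, {3, 2}, {3, 2}, {5, 3},
        {5, 3}, {2, 5}, {2, 5}, {2, 3}, {2, 3}, {2, 3}
    };

    // Count how many partitions each member holds in the given column (0 = primary, 1 = backup).
    static Map<Integer, Integer> countByMember(int[][] assignment, int column) {
        Map<Integer, Integer> counts = new TreeMap<>();
        for (int[] row : assignment) {
            counts.merge(row[column], 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        System.out.println("primary: " + countByMember(ASSIGNMENT, 0)); // primary: {2=5, 3=4, 5=4}
        System.out.println("backup:  " + countByMember(ASSIGNMENT, 1)); // backup:  {2=4, 3=5, 5=4}
    }
}
```

With 13 partitions and three storage nodes, a 5/4/4 split is as even as it can get, and the backups are spread the same way.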

This partition table is shared by all nodes, proxy and storage alike. When the proxy gets a put/get request, it first runs the partition logic to locate the primary member that holds the data, then dispatches the request to that node for storage or query.
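The lookup can be pictured roughly as follows. This is a simplified sketch, not Coherence code: the class name and methods are hypothetical, the table is the three-storage-node output copied by hand, and the key-to-partition rule (hash code modulo the partition count) is an assumption made for illustration. Coherence's real key partitioning strategy works on the serialized key and may map keys differently.

```java
final class PartitionLookup {
    static final int PARTITION_COUNT = 13;

    // {primary, backup} member IDs per partition, copied from the three-storage-node output.
    static final int[][] ASSIGNMENT = {
        {5, 2}, {3, 5}, {5, 2}, {3, 5}, {3, 2}, {3, 2}, {5, 3},
        {5, 3}, {2, 5}, {2, 5}, {2, 3}, {2, 3}, {2, 3}
    };

    // Assumed rule: non-negative hash modulo partition count (floorMod handles negative hashes).
    static int partitionOf(Object key) {
        return Math.floorMod(key.hashCode(), PARTITION_COUNT);
    }

    // Member that owns the primary copy of the key's partition.
    static int primaryOf(Object key) {
        return ASSIGNMENT[partitionOf(key)][0];
    }

    // Member that owns the backup copy of the key's partition.
    static int backupOf(Object key) {
        return ASSIGNMENT[partitionOf(key)][1];
    }

    public static void main(String[] args) {
        for (Object key : new Object[] {7, 20, "order-42"}) {
            System.out.println(key + " -> partition " + partitionOf(key)
                + ", primary " + primaryOf(key) + ", backup " + backupOf(key));
        }
    }
}
```

Under this assumed rule the integer keys 7 and 20 both fall into partition 7, so a put for either one would be dispatched to member 5, with member 3 holding the backup.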

Proxy node: gets the key's bucket.

Storage node: stores the data and maintains the key index.

The proxy node consults the partition table every time it receives a request to put data.

The storage nodes keep exchanging this information with each other.
