DRBD is a Linux kernel module that provides a distributed storage system. You can use DRBD to mirror block devices between Linux servers and, in turn, replicate the file systems and data on them.

In a nutshell, data changes on the master node are synced to the slave node in near real time. Changes on the master are captured at the kernel level, pushed to the slave node, and acknowledged over the network while the nodes are connected. If the network is down, the changes are queued locally and flushed to the remote node when it comes back online.

Here, I will install and configure a two-node cluster using two CentOS VMs. We need to make sure both VMs can reach each other over the network; after that it is just the install, make, and configure steps.
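As a quick way to confirm that both VMs are reachable before starting, a small helper along these lines could be used. This is only a sketch: the function name `check_nodes` and the `PROBE` override are made up for illustration, and it assumes the node names resolve from the machine where it runs.

```shell
# Report whether each named node answers a probe.
# Returns non-zero if any node is unreachable.
# PROBE is a hypothetical override hook; by default one ICMP echo is sent.
check_nodes() {
  probe="${PROBE:-ping -c 1}"
  failed=0
  for host in "$@"; do
    # $probe is intentionally unquoted so "ping -c 1" splits into words.
    if $probe "$host" >/dev/null 2>&1; then
      echo "$host reachable"
    else
      echo "$host UNREACHABLE"
      failed=1
    fi
  done
  return "$failed"
}
```

Running `check_nodes Home LA` on each VM prints one line per node and returns a non-zero status if either one does not answer.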

Let’s say I have two nodes, Home and LA. I will start the setup from Home.

On both nodes, expand the disk to add more space and create a new partition, hda4, of the same size on each (matching sizes are not strictly required).

Expand the disk space; here I will add 1G more space.

Then create the partition hda4.

Also make sure each node has two NICs for failover.

Now we will use a GUI tool called DRBD-MC, a standard Java application, to install and configure the DRBD cluster. It calls yum to install DRBD and pushes changes to the conf files as the user clicks through the GUI.

Download the drbd-mc jar file from http://oss.linbit.com/drbd-mc/ and run it on the master node.

java -jar ~/Desktop/drbd-mc-*.jar

This brings up the main screen.

Click the Host wizard, add the master node, called Home, and enter the root password.

Here is the sweet spot of the tool: if a prerequisite component is missing, just click Install to bring it in.

Here is the generated script to install Pacemaker:

wget -N -nd -P /etc/yum.repos.d/ http://www.clusterlabs.org/rpm/epel-5/clusterlabs.repo && rpm -Uvh http://download.fedora.redhat.com/pub/epel/5/i386/epel-release-5-4.noarch.rpm ; yum -y -x resource-agents-3.* -x openais-1.* -x openais-0.9* -x heartbeat-2.1.* install pacemaker.x86_64 heartbeat.x86_64 && /sbin/chkconfig --add heartbeat

After installing DRBD and the necessary dependencies such as Pacemaker, click the Cluster wizard to create a cluster. I will name it helloworld.

Then select the two available hosts.

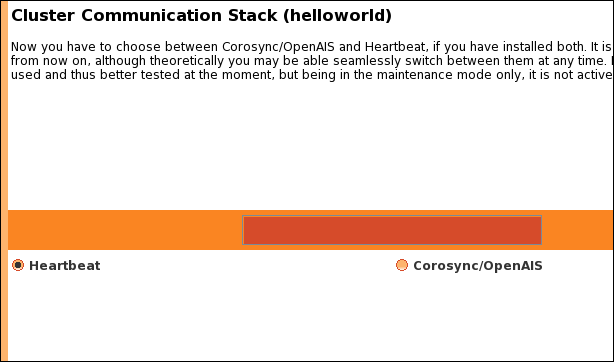

Choose the default communication protocol.

Click the Create Config button to generate the conf file.

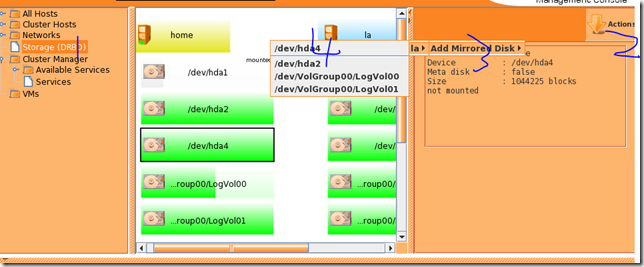

Once that is done, click the Storage menu, choose the hda4 partition, and use the Action menu to select LA as the mirror device.

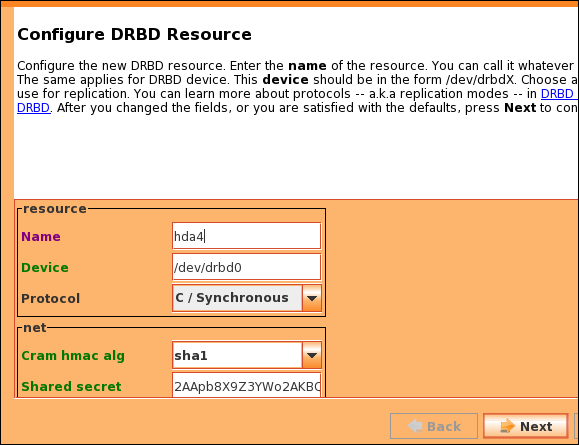

Give it the resource name hda4.

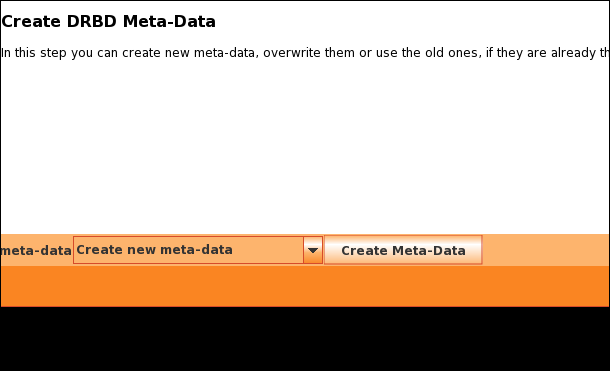

Click Next; you then need to create the metadata. Here we will keep the metadata on the device itself.
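For readers who want to see roughly what the GUI writes, here is a sketch of a DRBD 8.x-style resource stanza with internal metadata, produced by a small shell helper. The helper name `render_resource`, the IP addresses, port 7788, and `protocol C` are assumptions for illustration; the file DRBD-MC actually generates may differ. The names after `on` must match each node's `uname -n`.

```shell
# Print a DRBD 8.x-style resource stanza that keeps metadata on the
# backing device itself ("meta-disk internal").
# Arguments: resource, backing disk, node1, ip1, node2, ip2.
# Port 7788 and protocol C are assumed values, not taken from the article.
render_resource() {
  cat <<EOF
resource $1 {
  protocol C;
  on $3 {
    device    /dev/drbd0;
    disk      $2;
    address   $4:7788;
    meta-disk internal;
  }
  on $5 {
    device    /dev/drbd0;
    disk      $2;
    address   $6:7788;
    meta-disk internal;
  }
}
EOF
}
```

For example, `render_resource hda4 /dev/hda4 Home 192.168.1.10 LA 192.168.1.20` prints a stanza for the hda4 resource used here, with placeholder addresses.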

Then choose Home as the primary node and create an ext3 file system.

Now the device will be synced from the Home node to the LA node.

You can check progress with service drbd status, or just cat /proc/drbd.
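To pull a single value out of that status output, a helper like the one below can be handy. It is a sketch that assumes the DRBD 8.3-style line format, where the connection state, roles, and disk states appear as `cs:`, `ro:`, and `ds:` tokens; the function name `drbd_field` is made up for illustration.

```shell
# Print the value of one "key:value" token (cs, ro, or ds) from a DRBD
# status file. The path is a parameter so saved output can be parsed too.
# Arguments: status file, key.
drbd_field() {
  sed -n "s/.*[[:space:]]$2:\([^[:space:]]*\).*/\1/p" "$1" | head -n 1
}
```

`drbd_field /proc/drbd ro` prints the local and peer roles, and `drbd_field /proc/drbd ds` prints the two disk states.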

On the secondary node, the same output shows that its role is Secondary.

Then wait for the sync to finish.
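Instead of watching the status by hand, the wait can be scripted. The sketch below polls a status file until both sides report UpToDate; the function name `wait_for_sync` and its arguments are hypothetical, and it assumes the DRBD 8.3-style `ds:UpToDate/UpToDate` token.

```shell
# Poll a DRBD status file until both disks report UpToDate.
# Arguments: status file, seconds between polls, maximum number of polls.
# Returns 0 once synced, 1 if the poll budget runs out first.
wait_for_sync() {
  tries=0
  while [ "$tries" -lt "$3" ]; do
    if grep -q 'ds:UpToDate/UpToDate' "$1"; then
      return 0
    fi
    tries=$((tries + 1))
    sleep "$2"
  done
  return 1
}
```

For example, `wait_for_sync /proc/drbd 5 720 && echo synced` checks every five seconds for up to an hour.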

Now we can mount the /dev/drbd0 device on a directory.

mkdir -p /mnt/drbd/hda4

mount /dev/drbd0 /mnt/drbd/hda4

Then every change under /mnt/drbd/hda4 will be synced to the secondary node.

Please note that the device on the secondary node cannot be mounted, not even read-only, until you switch that node to the primary role.

Let’s create a file on the master first.

touch /mnt/drbd/hda4/helloworld.master

Then, on the primary node, bring down its interfaces to simulate a network failure.

ifdown eth0

ifdown eth1

The cluster is now in a disconnected state. On the slave node, switch its role so that it takes over as primary:

drbdadm primary hda4

Now the former secondary has the primary role.

mount /dev/drbd0 /mnt/drbd/hda4

After mounting the device, we can see that helloworld.master is there.
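The takeover steps can be wrapped in one function so they run in order and stop at the first failure. This is a sketch only: the name `take_over` and the `RUN` hook are made up for illustration, and it adds a `mkdir -p` on the assumption that the mount point may not exist yet on the surviving node.

```shell
# Promote this node for a resource and mount the replicated device.
# Arguments: resource, DRBD device, mount point.
# RUN is a hypothetical hook: RUN=echo prints the commands instead of
# executing them; leave it unset to run them for real (as root).
take_over() {
  ${RUN:-} drbdadm primary "$1" &&
  ${RUN:-} mkdir -p "$3" &&
  ${RUN:-} mount "$2" "$3"
}
```

`RUN=echo take_over hda4 /dev/drbd0 /mnt/drbd/hda4` previews the three commands; dropping `RUN=echo` executes them.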

As I said, DRBD is a low-level service that provides block-level replication. We can use this mechanism to replicate the MySQL binary log or the PostgreSQL WAL, and so get some level of high availability.

If we want to switch the roles back (LA is now the primary node and Home is unknown):

On the LA node, invalidate the primary role.

On the Home node, just bring the network back up with ifup eth0 and ifup eth1, then run “drbdadm connect hda4”.

All set: the roles are switched back to the initial setting. Home is primary and LA is secondary.

Hope it helps.

