Mahout and Hadoop are both Java libraries, and Mahout uses Maven to build its source code and manage its dependencies.

So we need to make sure the following pieces are ready:

- JDK

- Maven

- Hadoop

- Mahout

I will start from a fresh CentOS install and get all of these ready step by step.

Install JDK

Go to the Oracle JDK download site, http://www.oracle.com/technetwork/java/javase/downloads/index.html. I still prefer Java 6 over Java 7. Pick one of the .bin links, download it, and run it directly. I put Java under the /usr/lib/jdk6 folder.

Add the JDK's bin directory to PATH, and set the JAVA_HOME environment variable to /usr/lib/jdk6.
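The two exports could look roughly like the sketch below. It assumes the JDK really ended up in /usr/lib/jdk6 as described above; adjust the path if yours differs.

```shell
# Assumes the JDK was unpacked to /usr/lib/jdk6, as described above.
export JAVA_HOME=/usr/lib/jdk6
# Put the JDK's bin directory first so its java and jps win over any system copies.
export PATH="$JAVA_HOME/bin:$PATH"
```

To make the settings survive a new login, the same two lines can be appended to a shell startup file such as ~/.bashrc.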

Install Maven

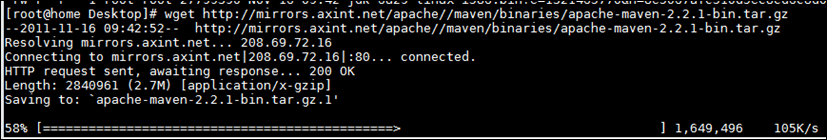

Download the binary package from http://maven.apache.org/download.html. Here I chose version 2.2.1, which I consider the more stable release.

Extract the zip file and link it to /usr/lib/maven.

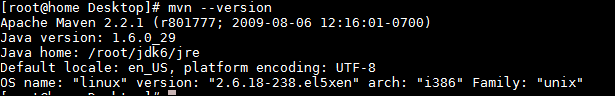

Add /usr/lib/maven/bin to PATH. Now you can run mvn --version to make sure it works; at the very least it should print the version.
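As a minimal sketch of that step, assuming /usr/lib/maven is the link to the extracted Maven directory from above:

```shell
# Assumes /usr/lib/maven links to the extracted Maven directory, as above.
export PATH="/usr/lib/maven/bin:$PATH"

# Print the version only when mvn is actually reachable on PATH.
if command -v mvn >/dev/null 2>&1; then
  mvn --version
fi
```

If nothing is printed, mvn was not found, which usually means the link or the PATH entry is wrong.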

For settings such as a proxy, see the documentation at http://maven.apache.org/download.html#Maven_Documentation

Install Hadoop

If you want to install Hadoop in fully distributed mode, see: How to: install and config hadoop to run in fully distributed Mode, Centos.

Here we have just one VM, so we keep it simple for the Mahout testing. I will use the Cloudera distribution.

Download the repo file for CentOS 5, http://archive.cloudera.com/redhat/cdh/cloudera-cdh3.repo, and copy it to the yum repo directory (/etc/yum.repos.d/). Then install the pseudo-distributed configuration package:

yum install hadoop-0.20-conf-pseudo

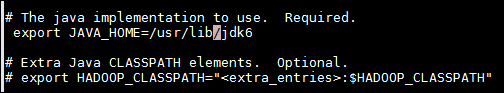

Once done, go to the /usr/lib/hadoop/conf directory and set JAVA_HOME to /usr/lib/jdk6 in hadoop-env.sh.
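For reference, the relevant line in hadoop-env.sh would look roughly like this. It assumes the JDK path used earlier in this post; the rest of the file stays as shipped.

```shell
# In /usr/lib/hadoop/conf/hadoop-env.sh
# Point Hadoop at the JDK installed earlier (path is the one used in this post).
export JAVA_HOME=/usr/lib/jdk6
```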

Then, as the hdfs user, format the namenode (for example with sudo -u hdfs hadoop namenode -format).

Then start the daemons with the /etc/init.d/hadoop-* scripts and run jps; you should see all the Java processes listed.
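Starting the daemons one by one gets tedious, so here is a small loop as a sketch. It assumes you run it as root and that the init scripts are named /etc/init.d/hadoop-* as mentioned above; the counter variable is only there for the summary line.

```shell
# Start every Hadoop init script found under /etc/init.d (run as root).
# The [ -x ] guard skips the unexpanded pattern when nothing matches.
started=0
for svc in /etc/init.d/hadoop-*; do
  [ -x "$svc" ] || continue
  "$svc" start && started=$((started + 1))
done
echo "Started $started Hadoop daemon(s)"

# jps ships with the JDK and lists the running Java processes.
if command -v jps >/dev/null 2>&1; then
  jps
fi
```

On a pseudo-distributed Hadoop 0.20 setup I would expect jps to list the NameNode, DataNode, SecondaryNameNode, JobTracker and TaskTracker processes.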

Now we can run a simple test: from /usr/lib/hadoop, copy one file into HDFS (for example with hadoop fs -put).

Open a browser and go to http://localhost:50070; the file we just uploaded should be listed there.

Now HDFS is ready. Next, run a MapReduce job to make sure Hadoop as a whole works.

If there are no errors, we are all set and Hadoop is ready.

Install Mahout

Download the source code; you can use svn to check out a copy of trunk:

svn co http://svn.apache.org/repos/asf/mahout/trunk

Then copy the code to /usr/lib/mahout.

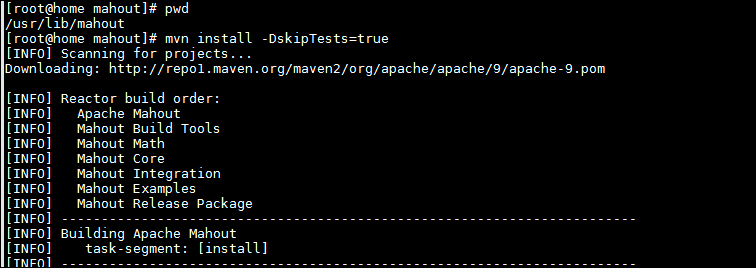

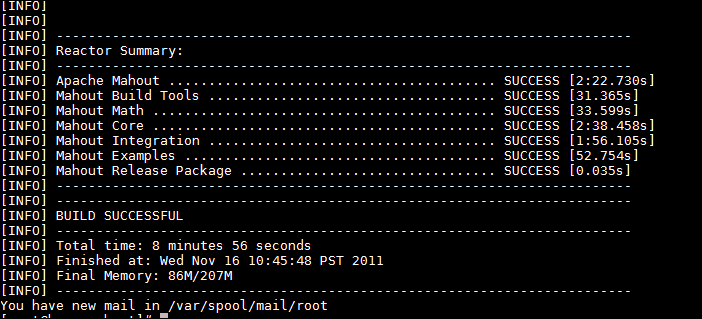

Then run mvn install -DskipTests to compile the source. Maven works out the dependencies and fetches the jars for you automatically.

Downloading all the jars takes time, and your mileage may vary.

Here is mine; it took several minutes:

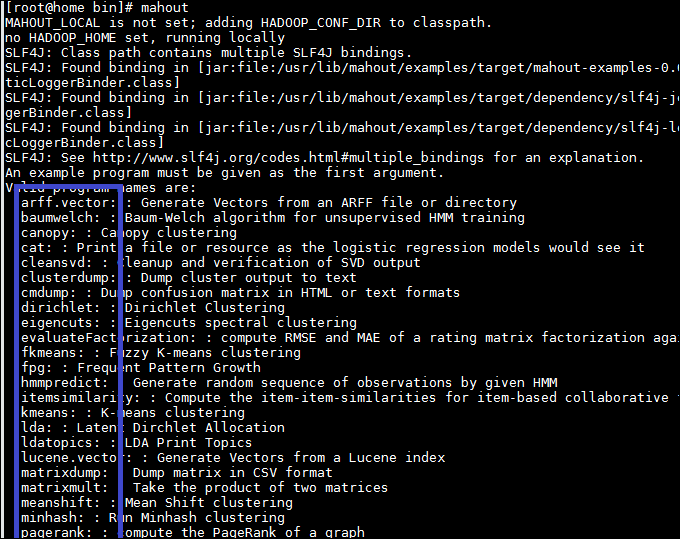

Now add /usr/lib/mahout/bin to PATH; then we can run mahout from the shell.

If you can't execute mahout, give it execute permission.
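Both steps can be sketched as below, assuming the checkout was copied to /usr/lib/mahout as described above. Setting MAHOUT_HOME is optional and is my addition here; the launcher script works it out on its own if the variable is unset.

```shell
# Assumes the Mahout checkout was copied to /usr/lib/mahout, as above.
export MAHOUT_HOME=/usr/lib/mahout
export PATH="$MAHOUT_HOME/bin:$PATH"

# Add the execute bit if the launcher script is present but not executable.
if [ -f "$MAHOUT_HOME/bin/mahout" ] && [ ! -x "$MAHOUT_HOME/bin/mahout" ]; then
  chmod +x "$MAHOUT_HOME/bin/mahout"
fi
```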

Running mahout with no arguments lists the available options for the different algorithms.

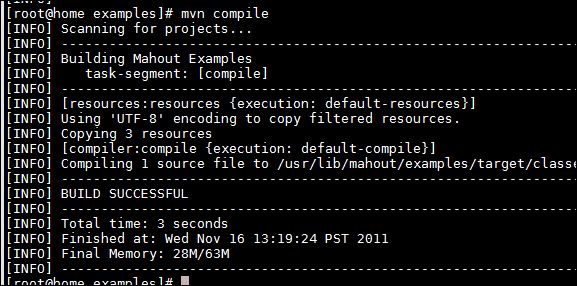

Then go to the examples folder and run mvn compile.

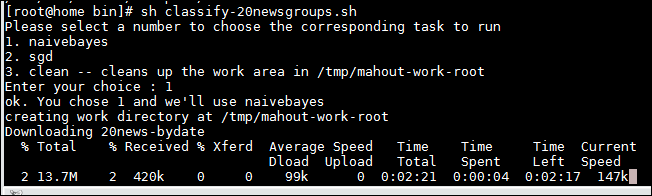

Now you can run some of the examples, such as the one that classifies the newsgroups.

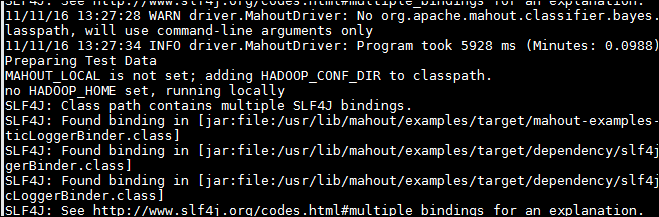

Here we didn't specify HADOOP_HOME, so it runs locally. The shell script downloads the data, prepares it, then runs the classifier.
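A local run might be started along these lines. This is only a sketch: it assumes the script is called classify-20newsgroups.sh and sits in examples/bin of the checkout under /usr/lib/mahout, so check your own copy if the file is not found. EXAMPLES_BIN is just a helper variable for this snippet.

```shell
# With HADOOP_HOME unset, the job runs locally instead of on the cluster.
unset HADOOP_HOME

# Assumption: the example script lives in examples/bin of the Mahout checkout.
EXAMPLES_BIN=/usr/lib/mahout/examples/bin
if [ -f "$EXAMPLES_BIN/classify-20newsgroups.sh" ]; then
  # Run from inside the directory so the script is found by its relative name.
  (cd "$EXAMPLES_BIN" && sh classify-20newsgroups.sh)
else
  echo "classify-20newsgroups.sh not found in $EXAMPLES_BIN" >&2
fi
```

Running the script from a different directory without its path is a likely cause of a "No such file or directory" error.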

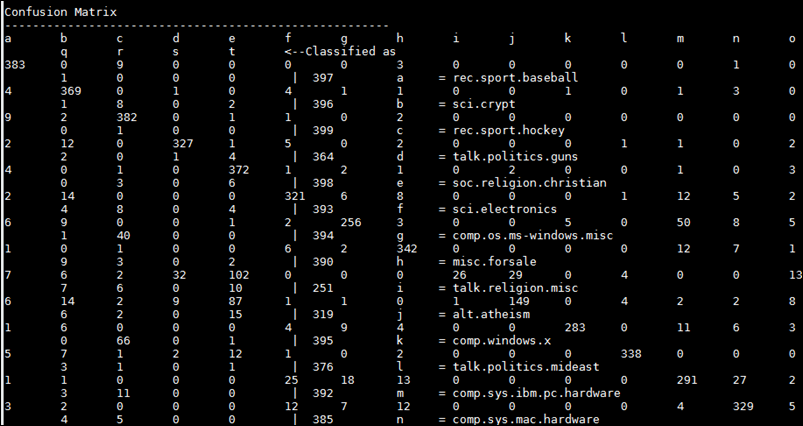

When done, it shows the confusion matrix against the test data.

121 comments:

vry nice blog..............actually

I have many queries....

1. how to export /usr/lib/mahout/bin to PATH

2. m done with installation by following ur blog...almost everything went fine bt getting error for the command "sh classify-20newsgroups.sh"

sh: classify-20newsgroups.sh: No such file or directory

m new to this so nt getting what is this.....

3. is it necessary to run every command through "root"????

thnx a lot :)

i am getting the following error

[INFO] Reactor Summary:

[INFO]

[INFO] Mahout Build Tools ................................ SUCCESS [3.160s]

[INFO] Apache Mahout ..................................... SUCCESS [0.934s]

[INFO] Mahout Math ....................................... SUCCESS [48.081s]

[INFO] Mahout Core ....................................... SUCCESS [1:02.933s]

[INFO] Mahout Integration ................................ SUCCESS [2:49.722s]

[INFO] Mahout Examples ................................... SUCCESS [2:01.534s]

[INFO] Mahout Release Package ............................ SUCCESS [0.092s]

[INFO] Mahout Math/Scala wrappers ........................ FAILURE [2:03.532s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 8:50.657s

[INFO] Finished at: Fri Sep 05 19:35:01 IST 2014

[INFO] Final Memory: 55M/312M

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal on project mahout-math-scala: Could not resolve dependencies for project org.apache.mahout:mahout-math-scala:jar:0.9: Could not transfer artifact org.scalatest:scalatest_2.9.2:jar:1.9.1 from/to central (http://repo.maven.apache.org/maven2): GET request of: org/scalatest/scalatest_2.9.2/1.9.1/scalatest_2.9.2-1.9.1.jar from central failed: Connection reset -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/DependencyResolutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn -rf :mahout-math-scala

Akila Siriweera

Interesting RARE & worth article, works fine.

Thanks for the creator.

Note : http://svn.apache.org/repos/asf/mahout/trunk

It needs to check out whole contained of the folder.

Thumbs up....

hi when i tried to run a example sh, the data is getting downloaded in the tmp/mahout-work-root/20news-all.

But i am getting an error,

put: `/tmp/mahout-work-root/20news-all': No such file or directory

Kindly help me out

thank u so much in advance

useful blog... i learnt more information about mahout from this blog.. thank you for sharing

hadoop training institute in adyar | big data training institute in adyar | hadoop training in chennai adyar | big data training in chennai adyar

After reading this blog i very strong in this topics and this blog really helpful to all... explanation are very clear so very easy to understand... thanks a lot for sharing this blog

hadoop training in chennai adyar | big data training in chennai adyar

Nice post. By reading your blog, i get inspired and this provides some useful information. Thank you for posting this exclusive post for our vision.

Blueprism training in velachery

Blueprism training in marathahalli

AWS Training in chennai

Really great post, I simply unearthed your site and needed to say that I have truly appreciated perusing your blog entries. I want to say thanks for great sharing.

java training in chennai | java training in bangalore

java online training | java training in pune

You got an extremely helpful website I actually have been here reading for regarding an hour. I’m an initiate and your success is incredibly a lot of a concept on behalf of me.

Data Science training in kalyan nagar | Data Science training in OMR

Data Science training in chennai | Data science training in velachery

Data science training in tambaram | Data science training in jaya nagar

Useful information.I am actual blessed to read this article.thanks for giving us this advantageous information.I acknowledge this post.and I would like bookmark this post.Thanks

angularjs Training in chennai

angularjs Training in chennai

angularjs-Training in tambaram

angularjs-Training in sholinganallur

angularjs-Training in velachery

Its a wonderful post and very helpful, thanks for all this information. You are including better information regarding this topic in an effective way. T hank you so much.

Cloud computing Training in Chennai

Hadoop Training in Chennai

Cloud computing Training centers in Chennai

Cloud computing Training institutes in Chennai

Big Data Course in Chennai

Big Data Hadoop Training in Chennai

Thanks for sharing this installation steps admin, it is really helpful.

Azure Training in Chennai

Microsoft Azure Training

Azure Training center in Chennai

AWS Training in Chennai

Robotics Process Automation Training in Chennai

DevOps Training in Chennai

Nice blog. Can't be written much better. You’re doing a great job. Keep continuing.

French Class in Mulund

French Coaching in Mulund

French Classes in Mulund East

French Language Classes in Mulund

French Training in Mulund

French Coaching Classes in Mulund

French Classes in Mulund West

Thanks for taking time to share this java concepts admin, I learned a lot from your blog. Keep sharing more like this.

DevOps Training in Chennai

DevOps course in Chennai

DevOps course

Best DevOps Training in Chennai

AWS Training in Chennai

AWS Training in Velachery

Thank you for sharing such a great concept. I got more knowledge from this blog. Your site was amazing. Keep update on some more details...

Blue Prism Training Bangalore

Blue Prism Classes in Bangalore

Blue Prism Training Centers in Bangalore

Blue Prism Institute in Bangalore

Blue Prism Training in Perambur

Blue Prism Training in Nolambur

Amazing Post . Thanks for sharing. Your style of writing is very unique. Pls keep on updating.

Spoken English Classes in Chennai

Best Spoken English Classes in Chennai

Spoken English Class in Chennai

Spoken English in Chennai

Best Spoken English Class in Chennai

English Coaching Classes in Chennai

Thanks for sharing,this blog makes me to learn new thinks.

interesting to read and understand.keep updating it.

Article submission sites

Education

Selenium Training in Chennai

Selenium Training

iOS Training in Chennai

Digital Marketing Training in Chennai

core java training in chennai

german classes

german language in chennai

german classes chennai

Really the blog which you have shared is more informative to us. Thanks for your information.

Tally Classes in Coimbatore

Tally Course in Coimbatore

Tally Training Institute

Best Tally Course

Tally Training Coimbatore

Nice article I was really impressed by seeing this blog, it was very interesting and it is very useful for me.

Javascript Training in Bangalore

Java script Training in Bangalore

Java Coaching Institutes in Bangalore

Advanced Java Training Institute in Bangalore

Good written and great info. Thank you for taking the time to provide us with your valuable information. Please Keep it up...

Best CCNA Training Institute in Bangalore

CCNA Certification in Bangalore

CCNA Training Bangalore

CCNA Training institutes in Bangalore

CCNA Training in Tnagar

CCNA Course in Vadapalani

CCNA Training in Chennai Kodambakkam

CCNA Training in Chennai

Wonderful blog!!! Thanks for your information… Waiting for your upcoming data.

Ethical Hacking Course in Coimbatore

Hacking Course in Coimbatore

Ethical Hacking Training in Coimbatore

Ethical Hacking Training Institute in Coimbatore

Ethical Hacking Training

Ethical Hacking Course

I am feeling great to read this.you gave a nice info for us.

please update more.

angularjs classes in bangalore

angularjs tutorial in bangalore

Best AngularJS Training Institute in Anna nagar

AngularJS Certification Training in T nagar

Great blog!!! It was very impressed to me. I like so much and keep sharing. Thank you.

Robotics Courses in Bangalore

Automation Courses in Bangalore

RPA Courses in Bangalore

Robotics Classes in Bangalore

Robotics Training in Bangalore

Automation Training in Bangalore

Thanks for sharing,this blog makes me to learn new thinks.

interesting to read and understand.keep updating it.

Java Training in Sholinganallur

Java Institute in T nagar

Java Training in Chennai Anna Nagar

core Java Training in Bangalore

I am really enjoying reading your well written articles.

It looks like you spend a lot of effort and time on your blog.

php training in bangalore

php course in bangalore

Best Web Designing Training Institute in Bangalore

Website Development Course in Bangalore

such a valuable blog!!! thanks for the post.

Selenium Training in Chennai

selenium testing training in chennai

iOS Training in Chennai

French Classes in Chennai

Big Data Training in Chennai

cloud computing certification courses in chennai

best cloud computing training institutes in chennai

Thanks for sharing this valuable information.Its more useful to us.its very interesting to know the blog with clear vision.

linuxhacks

Technology

Your blog is really good. I feel very good to read and useful information as updated more, keep blogging......

Machine Learning Training in Velachery

Machine Learning Training in Chennai Velachery

Machine Learning Course in Tnagar

Machine Learning Training in Nungambakkam

Machine Learning Course in Saidapet

Machine Learning Training in Aminjikarai

Thanks for the great article this is very useful info thanks for the wornderful post,

custom software development company.

Thanks for the great article this is very useful info thanks for the wornderful post,

custom software development company.

Very nice post here thanks for it .I always like and such a super contents of these post.Excellent and very cool idea and great content of different kinds of the valuable information's.

machine learning with python course in Chennai

top institutes for machine learning in chennai

I love your way of writing. The content shows your in-depth knowledge on the subject. Thanks for Sharing.

Node JS Training in Chennai

Node JS Course in Chennai

Node JS Advanced Training

Node JS Training Institute in chennai

Node JS Course

IELTS coaching in Chennai

IELTS Training in Chennai

SAS Training in Chennai

SAS Course in Chennai

Given so much info in it, The list of your blogs are very helpful for those who want to learn more interesting facts. Keeps the users interest in the website, and keep on sharing more

Our Credo Systemz Which is designed to offer you OpenStack Training skills required to kick-start your journey as an OpenStack Cloud Administrator.

Please free to call us @ +91 9884412301 / 9600112302

Openstack course training in Chennai | best Openstack course in Chennai | best Openstack certification training in Chennai | Openstack certification course in Chennai | openstack training in chennai omr | openstack training in chennai velachery

Build arbitrary based on docker Hadoop Training in Bangalore is outstanding

Extra-ordinary post. Looking for an information like this for a long time. Thanks for Posting.

Pega training in chennai

Pega course in chennai

Primavera Training in Chennai

Primavera Course in Chennai

IELTS Coaching in Chennai

IELTS Training in Chennai

Nice blog..! I really loved reading through this article. Thanks for sharing such a

amazing post with us and keep blogging... Best React js training near me | React js training online

Thanks for information , This is very useful for me.

Keep sharing Lean Six Sigma Green Belt Training Bangalore

Nice post very good to read

best azure certification training in chennai

Thanks for such a great article here. I was searching for something like this for quite a long time and at last, I’ve found it on your blog. It was definitely interesting for me to read about their market situation nowadays.angularjs best training center in chennai | angularjs training in velachery | angularjs training in chennai | best angularjs training institute in chennai

Your writing is very unique. Amazing post. It is very informative. Thanks for sharing.

Informatica Training in Chennai

Informatica Training Center Chennai

Informatica Training chennai

Informatica Training institutes in Chennai

Best Informatica Training Institute In Chennai

Informatica Training in OMR

Informatica Training in Porur

I really like your blog. You make it interesting to read and entertaining at the same time. I cant wait to read more from you.

Microsoft Azure online training

Selenium online training

Java online training

Python online training

uipath online training

Great Post. Wonderfiul way of sharing the thoughts. Hats-off.

Data Analytics Courses in Chennai

Big Data Analytics Courses in Chennai

Big Data Analytics Training in Chennai

Data Analytics Training in Chennai

Big Data Analytics in Chennai

Data Analytics Courses in Velachery

Data Analytics Courses in T Nagar

I have perused your blog its appealing and noteworthy. I like it your blog.

digital marketing company in chennai,

digital marketing agency in india,

digital marketing company in chennai,

online marketing company in chennai,

digital marketing company in india,

digital marketing services,

digital marketing company,

Very informative piece of article, this blog has helped me to understand the concept even better.

software testing training in chennai | software testing course in chennai | testing courses in chennai | software testing institute in chennai | software testing training institute in chennai | testing courses in chennai with placement | best software testing training institute in chennai | best software testing institute in chennai

Amazing WEbsite

토토사이트

This article was very helpful for my research and I learn more ideas for your excellent post. Thank you and I like you more post in the future...

Tableau Training in Chennai

Tableau Course in Chennai

Advanced Excel Training in Chennai

Corporate Training in Chennai

Embedded System Course Chennai

Linux Training in Chennai

Power BI Training in Chennai

Job Openings in Chennai

Tableau Training in Tambaram

Tableau Training in Adyar

Thank You for an amazing article, it was really helpful for me and loved to read this.

Kumkum Bhagya Upcoming Story and News

yeh ristha kya kehlata hai upcoming story and latest news

Excel Training in Chennai

Excel Advanced course

Unix Training in Chennai

{Placement Training in Chennai

Primavera Training in Chennai

Pega Training in Chennai

Oracle Training in Chennai

Oracle DBA Training in Chennai

JMeter Training in Chennai

Soft Skills Training in Chennai

JMeter Training in Chennai

Yamaha Rx100 modified

mini Bullet 200cc

splendor modified pictures

know about Traffic rules

Pulsar 220 modified

Tvs Radeon full review

Welcome to Biksdna.com clcik here

Thanks for posting keep updating it.

Ionic Training in Chennai

Ionic Training

french courses in chennai

pearson vue

Blockchain Training in Chennai

Spoken English Course in Chennai

Ionic Training in Tambaram

Ionic Training in Adyar

Nice blog...Thanks for sharing informative message ...

Python training in Chennai

Python training in OMR

Python training in Velachery

Python certification training in Chennai

Python training fees in Chennai

Python training with placement in Chennai

Python training in Chennai with Placement

Python course in Chennai

Python Certification course in Chennai

Python online training in Chennai

Python training in Chennai Quora

Best Python Training in Chennai

Best Python training in OMR

Best Python training in Velachery

Best Python course in Chennai

Hi, I like your post really I have read first-time Thanks for sharing keep up the good work.

Very good post I am very thankful to author. This information is helpful for everyone.

Are you looking for the best home elevators in India? Click here the link below: Home elevators | Home lift India

Great post. Thanks for sharing the post please keep sharing the post

Thanks for sharing such a nice blog. I like it. Are you looking for the best home elevators in India? Home elevator India

Nice article

Thanks for sharing the information

Please visit leadmirro to know your blog rank

go to

go to

go to

go to

go to

go to

Very good blog with lots of useful information about amazon web services concepts.

AWS Training in Chennai | AWS Training Institute in Chennai | AWS Training Center in Chennai | Best AWS Training in Chennai

Keep posting

interview-questions/aptitude/permutation-and-combination/how-many-groups-of-6-persons-can-be-formed

tutorials/oracle/oracle-delete

technology/chrome-flags-complete-guide-enhance-browsing-experience/

interview-questions/aptitude/time-and-work/a-alone-can-do-1-4-of-the-work-in-2-days

interview-questions/programming/recursion-and-iteration/integer-a-40-b-35-c-20-d-10-comment-about-the-output-of-the-following-two-statements

nice.

Acceptance is to offer what a

lighted

A reduction of 20 in the price of salt

Power bi resumes

Qdxm:sfyn::uioz:?

If 10^0.3010 = 2, then find the value of log0.125 (125) ?

A dishonest dealer professes to sell his goods at cost price

but still gets 20% profit by using a false weight. what weight does he substitute for a kilogram?

Oops concepts in c# pdf

Resume for bca freshers

Attempt by security transparent method

'webmatrix.webdata.preapplicationstartcode.start()' to access security critical method 'system.web.webpages.razor.webpagerazorhost.addglobalimport(system.string)' failed.

Node js foreach loop

Good Blog..

how to hack with crosh

javascript integer max

apply css to iframe content

given signs signify something and on that basis assume the given statement to be true

zeus learning aptitude paper for software testing

how to hack wifi hotspot on android

she most of her time tomusic

unexpected token o in json at position 1

ywy

javascript sort array of objects by key value

good.............!

dominican republic web hosting

iran hosting

palestinian territory web hosting

panama web hosting

syria hosting

services hosting

afghanistan shared web hosting

andorra web hosting

belarus web hosting

Sach janiye

Mrinalini Sarabhai

Sandeep Maheshwari

dr sarvepalli radhakrishnan

Arun Gawli

Rani Padmini

Sushruta

Harshavardhana

Nanaji Deshmukh

Tansen

I was looking for the innovativedata migration service provider due to which I found your company to be the one.

excellent blogs.....!!!

chile web hosting

colombia web hosting

croatia web hosting

cyprus web hosting

bahrain web hosting

india web hosting

iran web hosting

kazakhstan web hosting

korea web hosting

moldova web hosting

Excellent post...very useful...

python training in chennai

internships in hyderabad for cse 2nd year students

online inplant training

internships for aeronautical engineering students

kaashiv infotech internship review

report of summer internship in c++

cse internships in hyderabad

python internship

internship for civil engineering students in chennai

robotics course in chennai

very good...

internship report on python

free internship in chennai for ece students

free internship for bca

internship for computer science engineering students in india

internships in hyderabad for cse students 2018

electrical companies in hyderabad for internship

internships in chennai for cse students 2019

internships for ece students

inplant training in tcs chennai

internship at chennai

Good information

kaashiv infotech internship fees

internship

internship in chennai for cse students

internships in hyderabad for eee students 2019

inplant training for cse

internship for ece students in coimbatore

internship for cse

internship for cse 3rd year students

inplant training in chennai for ece students

summer training for 3rd year electronics and communication engineering students

hii nyc...good

internships for cse students in bangalore

internship for cse students

industrial training for diploma eee students

internship in chennai for it students

kaashiv infotech in chennai

internship in trichy for ece

inplant training for ece

inplant training in coimbatore for ece

industrial training certificate format for electrical engineering students

internship certificate for mechanical engineering students

This is very good quality article and interesting..& This post has a nice one. share more updates.

aws Training in Bangalore

python Training in Bangalore

hadoop Training in Bangalore

angular js Training in Bangalore

bigdata analytics Training in Bangalore

python Training in Bangalore

aws Training in Bangalore

9x flicks

Hardware and Networking Training in Chennai

CCNA Training in Chennai

AWS Training in Chennai

SAP Training in Chennai

Software Testing Training in Chennai

Java Training in Chennai

SAP Training in Chennai

Kegiatan yang biasanya dilakukan di togel online yaitu melihat kebenaran dalam hasil result togel

Such an exceptionally valuable article. Extremely intriguing to peruse this article. I might want to thank you for the endeavors you had made for

composing this amazing article.

digital marketing blog

digital marketing course fees

seo training in chennai

digital marketing blogs

blog for digital marketing

blog about digital marketing

digital marketing bloggers

digital marketing resources

search engine optimization guide

free search engine optimization tutorials

free SEO tutorials

seo training tutorials

digital marketing tutorials

free digital marketing resources

free SEO

Thanks for sharing such a great information..Its really nice and informative...

sap bi course

You write this post very carefully I think, which is easily understandable to me. Not only this, but another post is also good. As a newbie, this info is really helpful for me. Thanks to you.

CCC Previous Year Question Paper

vry nice blog..............actually

Swachata par Nibandh

Thanks for sharing such a great information..Its really nice and informative..

learn sap ui5

dsf

Good..

coronavirus update

inplant training in chennai

inplant training

inplant training in chennai for cse

inplant training in chennai for ece

inplant training in chennai for eee

inplant training in chennai for mechanical

internship in chennai

online internships

Such an nice and great post.

AngularJS training in chennai | AngularJS training in anna nagar | AngularJS training in omr | AngularJS training in porur | AngularJS training in tambaram | AngularJS training in velachery

Excellent Post..

SAP Training in Chennai | SAP training Institute in Chennai

Informative blog post. Thanks for this wonderful Post.

SAP Training in Chennai

AWS Training in Chennai

Hardware and Networking Training in Chennai

QTP Training in Chennai

CCNA Training in Chennai

Great Blog. Thnaks.

SAP Training in Chennai

Java Training in Chennai

Software Testing Training in Chennai

.Net Training in Chennai

Hardware and Networking Training in Chennai

AWS Training in Chennai

Azure Training in Chennai

Selenium Training in Chennai

QTP Training in Chennai

Android Training in Chennai

In the beginning, I would like to thank you much about this great post. Its very useful and helpful for anyone looking for tips. I like your writing style and I hope you will keep doing this good working.

Oracle Training | Online Course | Certification in chennai | Oracle Training | Online Course | Certification in bangalore | Oracle Training | Online Course | Certification in hyderabad | Oracle Training | Online Course | Certification in pune | Oracle Training | Online Course | Certification in coimbatore

You are including better information regarding this topic in an effective way. thank you so much information.

Python Training in Chennai

Python Training in Bangalore

Python Training in Hyderabad

Python Training in Coimbatore

Python Training

python online training

python flask training

python flask online training

Good written and great info. Thank you for taking the time to provide us with your valuable information. Please Keep it up...

web designing training in chennai

web designing training in omr

digital marketing training in chennai

digital marketing training in omr

rpa training in chennai

rpa training in omr

tally training in chennai

tally training in omr

This is an awesome post. Really very informative and creative contents. These concept is a good way to enhance the knowledge.

sap training in chennai

sap training in tambaram

azure training in chennai

azure training in tambaram

cyber security course in chennai

cyber security course in tambaram

ethical hacking course in chennai

ethical hacking course in tambaram

I always like and such a super contents of these post.Excellent and very cool idea and great content of different kinds of the valuable information's.

sap training in chennai

sap training in annanagar

azure training in chennai

azure training in annanagar

cyber security course in chennai

cyber security course in annanagar

ethical hacking course in chennai

ethical hacking course in annanagar

I am satisfied that you simply shared this helpful information with us. Please stay us informed like this. Thanks for sharing.

sap training in chennai

sap training in annanagar

azure training in chennai

azure training in annanagar

cyber security course in chennai

cyber security course in annanagar

ethical hacking course in chennai

ethical hacking course in annanagar

Outstanding blog post, I have marked your site so ideally I’ll see much more on this subject in the foreseeable future.

oracle training in chennai

oracle training in velachery

oracle dba training in chennai

oracle dba training in velachery

ccna training in chennai

ccna training in velachery

seo training in chennai

seo training in velachery

Thanks for taking the time to discuss this, I feel strongly about it and love learning more on this topic.

Angular js Training in Chennai

Angular js Training in Velachery

Angular js Training in Tambaram

Angular js Training in Porur

Angular js Training in Omr

Angular js Training in Annanagar

This blog is very interesting. I learned so much and want to thank you for sharing it in the first place. It is really helpful for my future endeavors. Thanks for your efforts and making it available to public

Selenium Training in Chennai

Selenium Training in Velachery

Selenium Training in Tambaram

Selenium Training in Porur

Selenium Training in Omr

Selenium Training in Annanagar

thanks for sharing information awesome blog post .

amazon web services aws training in chennai

microsoft azure training in chennai

workday training in chennai

android-training-in chennai

ios training in chennai

Your writing is very unique. Amazing post. It is very informative. Thanks for sharing.

IELTS Coaching in chennai

German Classes in Chennai

GRE Coaching Classes in Chennai

TOEFL Coaching in Chennai

Spoken english classes in chennai | Communication training

Mua vé máy bay tại Aivivu, tham khảo

Lịch bay từ Hàn Quốc về Việt Nam hôm nay

vé máy bay hà nội sài gòn vietjet

vé máy bay cần thơ hà nội bamboo

vé máy bay hải phòng nha trang

vé máy bay đi Huế Vietjet

taxi sân bay nội bài
