Mahout and Hadoop are basically both Java libraries. Mahout uses Maven to build its source code and manage its dependencies.

So we need to make sure the following pieces are in place:

- JDK

- Maven

- Hadoop

- Mahout

I will start from a fresh CentOS install and set each of these up step by step.

Install JDK

Go to the Oracle JDK download site, http://www.oracle.com/technetwork/java/javase/downloads/index.html. I still prefer Java 6 over Java 7, so pick one of the Java 6 .bin links, download it and run it directly. I will put the JDK under the /usr/lib/jdk6 folder.

Add its bin directory to PATH and set the JAVA_HOME environment variable to /usr/lib/jdk6.
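A minimal sketch of this step, assuming the non-RPM self-extracting .bin installer. The file name jdk-6u45-linux-x64.bin and the directory jdk1.6.0_45 it unpacks into are hypothetical examples, so substitute the version you actually downloaded. The two export lines are what you would put in ~/.bashrc (or /etc/profile) to make the settings permanent.

```shell
# Hypothetical installer name; use the .bin file you actually downloaded.
JDK_BIN=jdk-6u45-linux-x64.bin
JDK_DIR=jdk1.6.0_45   # directory the installer unpacks into (assumption)

# One-time unpack into /usr/lib/jdk6 (needs root); skipped if already done.
if [ -f "$JDK_BIN" ] && [ ! -d /usr/lib/jdk6 ]; then
  chmod +x "$JDK_BIN"
  ./"$JDK_BIN"                  # unpacks into ./$JDK_DIR in the current directory
  mv "$JDK_DIR" /usr/lib/jdk6
fi

# Put these two lines in ~/.bashrc (or /etc/profile) to make them permanent.
export JAVA_HOME=/usr/lib/jdk6
export PATH="$JAVA_HOME/bin:$PATH"
```

After reloading the profile (for example with `source ~/.bashrc`), `java -version` should report a 1.6 JDK.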

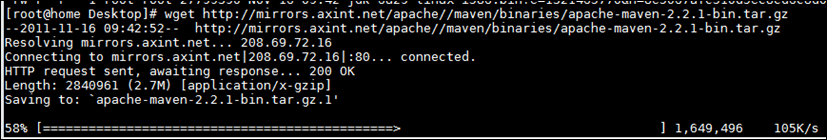

Install Maven

Download the binary package from http://maven.apache.org/download.html. Here I chose version 2.2.1, which I consider more stable.

Extract the zip file and link the extracted directory to /usr/lib/maven.

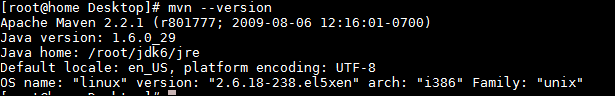

Add /usr/lib/maven/bin to PATH. Now you can run `mvn -version` to make sure it works; at minimum it should print the Maven version.
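The Maven step as a shell sketch. It assumes the 2.2.1 binary zip was saved as apache-maven-2.2.1-bin.zip in the current directory and that `unzip` is installed; exporting M2_HOME is a common convention for Maven 2 rather than something this setup strictly needs.

```shell
MAVEN_ZIP=apache-maven-2.2.1-bin.zip   # assumed download file name

# One-time: unpack under /usr/lib and point a stable symlink at it (needs root).
if [ -f "$MAVEN_ZIP" ] && [ ! -e /usr/lib/maven ]; then
  unzip -q "$MAVEN_ZIP" -d /usr/lib          # creates /usr/lib/apache-maven-2.2.1
  ln -s /usr/lib/apache-maven-2.2.1 /usr/lib/maven
fi

# The mvn launcher lives in bin/, so that is the directory that goes on PATH.
export M2_HOME=/usr/lib/maven
export PATH="$M2_HOME/bin:$PATH"

# Quick check: print the Maven (and Java) version if mvn is reachable now.
if command -v mvn >/dev/null 2>&1; then
  mvn -version
fi
```

Keeping the version number only in the symlink target means a later Maven upgrade is just a matter of re-pointing /usr/lib/maven.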

For settings such as a proxy, see the Maven documentation here: http://maven.apache.org/download.html#Maven_Documentation

Install Hadoop

If you want to install Hadoop in fully distributed mode, check out "How to: install and config hadoop to run in fully distributed Mode, Centos."

Here we have just one VM, so let's keep things simple for the Mahout testing. I will use the Cloudera distribution (CDH3) in pseudo-distributed mode.

Download the repo file for CentOS 5, http://archive.cloudera.com/redhat/cdh/cloudera-cdh3.repo, copy it to the yum repo directory (/etc/yum.repos.d), and then install the pseudo-distributed package:

yum install hadoop-0.20-conf-pseudo
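The same two steps as a sketch to run as root. It fetches the repo file with curl (wget works just as well). Note that this CDH3 archive URL dates from when the post was written and may no longer be served.

```shell
REPO_URL=http://archive.cloudera.com/redhat/cdh/cloudera-cdh3.repo
REPO_FILE=/etc/yum.repos.d/cloudera-cdh3.repo

# Only attempt the install as root on a yum-based system such as CentOS 5.
if [ "$(id -u)" -eq 0 ] && command -v yum >/dev/null 2>&1; then
  curl -fsSL -o "$REPO_FILE" "$REPO_URL" &&
    yum install -y hadoop-0.20-conf-pseudo
else
  echo "skipping: run as root on a yum-based system" >&2
fi
```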

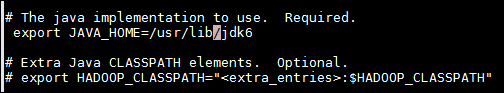

Once that is done, go to the /usr/lib/hadoop/conf directory and set JAVA_HOME to /usr/lib/jdk6 in hadoop-env.sh.
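One way to make that edit non-interactively, as a sketch: it rewrites an existing JAVA_HOME line (commented out or not) in place, and appends one if the file has none.

```shell
HADOOP_ENV=/usr/lib/hadoop/conf/hadoop-env.sh
NEW_LINE='export JAVA_HOME=/usr/lib/jdk6'

if [ -w "$HADOOP_ENV" ]; then
  if grep -q '^#* *export JAVA_HOME=' "$HADOOP_ENV"; then
    # Rewrite the existing (possibly commented-out) JAVA_HOME line in place.
    sed -i "s|^#* *export JAVA_HOME=.*|$NEW_LINE|" "$HADOOP_ENV"
  else
    # No JAVA_HOME line at all: append one.
    echo "$NEW_LINE" >> "$HADOOP_ENV"
  fi
  # Show the resulting line so you can confirm the change.
  grep '^export JAVA_HOME' "$HADOOP_ENV"
fi
```

Using `|` as the sed delimiter avoids having to escape the slashes in the path.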

Then, as the hdfs user, format the NameNode.

Then start the daemons using the /etc/init.d/hadoop-* scripts and run `jps`; you should see all the Hadoop Java processes listed.
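Run as root, the format-and-start sequence looks roughly like this. It assumes the CDH3 package names its init scripts /etc/init.d/hadoop-0.20-* (one each for the namenode, datanode, secondarynamenode, jobtracker and tasktracker), and it calls jps through JAVA_HOME because a root shell may not have the JDK on PATH. Format only once: reformatting wipes HDFS.

```shell
JAVA_HOME=${JAVA_HOME:-/usr/lib/jdk6}

if [ "$(id -u)" -eq 0 ] && ls /etc/init.d/hadoop-0.20-* >/dev/null 2>&1; then
  # One-time: format HDFS as the hdfs user (this erases existing HDFS data).
  sudo -u hdfs hadoop namenode -format

  # Start every Hadoop 0.20 daemon installed by the conf-pseudo package.
  for service in /etc/init.d/hadoop-0.20-*; do
    "$service" start
  done

  # Expect NameNode, DataNode, SecondaryNameNode, JobTracker and TaskTracker.
  "$JAVA_HOME/bin/jps"
fi
```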

Now we can run a simple test. Go to /usr/lib/hadoop and copy a file into HDFS.
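For example, the upload can be done with the `hadoop fs` shell. This sketch runs as the hdfs user, which owns the freshly formatted filesystem, and copies /etc/hosts into an HDFS directory named /tmp/smoke-test; both the file and the directory name are arbitrary choices for illustration.

```shell
HDFS_TEST_DIR=/tmp/smoke-test   # hypothetical HDFS directory for the test

if command -v hadoop >/dev/null 2>&1; then
  # Create the target directory, upload a small local file, then list it.
  sudo -u hdfs hadoop fs -mkdir "$HDFS_TEST_DIR"
  sudo -u hdfs hadoop fs -put /etc/hosts "$HDFS_TEST_DIR/"
  sudo -u hdfs hadoop fs -ls "$HDFS_TEST_DIR"
fi
```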

Open a browser and go to http://localhost:50070 (the NameNode web UI); the file we just uploaded should be listed there.

Now HDFS is ready. Next, run a MapReduce job to make sure the rest of Hadoop works too.
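A common smoke test is the pi estimator from the examples jar that ships with Hadoop. The jar location below is an assumption about the CDH3 layout (hence the glob), and the arguments 2 and 1000 (number of map tasks and samples per map) are deliberately tiny so the job finishes quickly.

```shell
# Pick up the examples jar; the exact file name varies between releases.
EXAMPLES_JAR=$(ls /usr/lib/hadoop/hadoop-examples*.jar 2>/dev/null | head -n 1)

if [ -n "$EXAMPLES_JAR" ]; then
  # Estimate pi with 2 map tasks and 1000 samples per map.
  sudo -u hdfs hadoop jar "$EXAMPLES_JAR" pi 2 1000
else
  echo "hadoop examples jar not found under /usr/lib/hadoop" >&2
fi
```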

If there are no errors, we are all set: Hadoop is ready.

Install Mahout

Download the source code. You can use svn to check out a copy of trunk:

svn co http://svn.apache.org/repos/asf/mahout/trunk

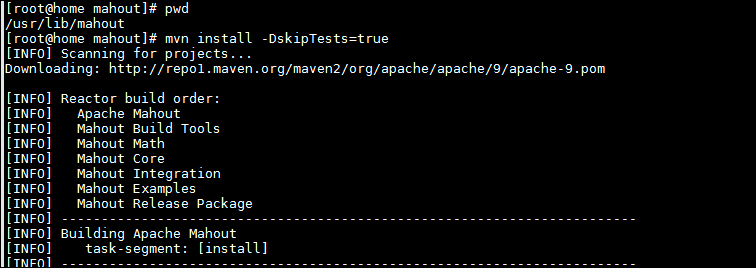

Then copy the code to /usr/lib/mahout.

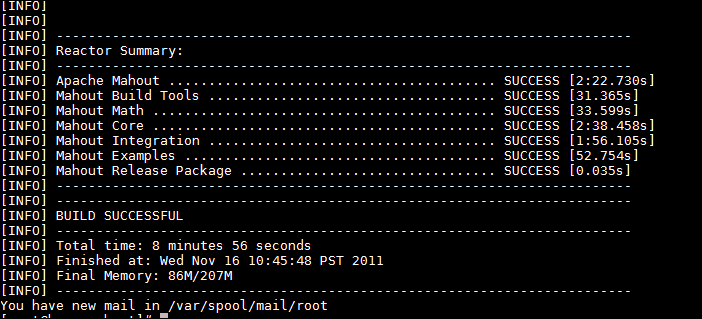

Then run `mvn install -DskipTests` to compile the source. Maven works out the dependencies and fetches the required jars for you automatically.
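Put together, the checkout, copy and build look roughly like this sketch. It assumes svn is installed, mvn is on PATH from the Maven step, and you have write access to /usr/lib; the svn URL is the one above, from when Mahout still lived in Subversion.

```shell
MAHOUT_HOME=/usr/lib/mahout

# One-time: check out trunk and copy it into place (skipped if already there).
if [ ! -d "$MAHOUT_HOME" ] && [ -w /usr/lib ] && command -v svn >/dev/null 2>&1; then
  svn co http://svn.apache.org/repos/asf/mahout/trunk
  cp -r trunk "$MAHOUT_HOME"
fi

# Build every module and install the artifacts into ~/.m2, skipping unit tests.
if [ -f "$MAHOUT_HOME/pom.xml" ] && command -v mvn >/dev/null 2>&1; then
  (cd "$MAHOUT_HOME" && mvn install -DskipTests)
fi
```

Running the build in a subshell keeps the caller's working directory unchanged.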

Downloading all the jars takes a while; your mileage may vary.

Here is my MPG; it took several minutes:

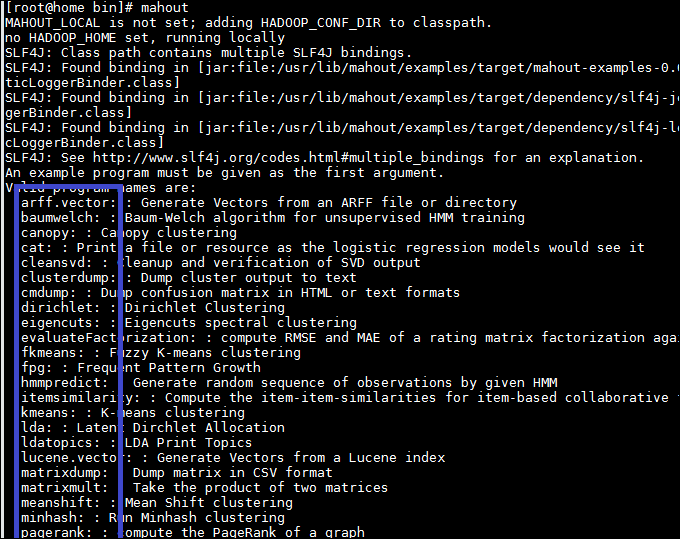

Now add /usr/lib/mahout/bin to PATH so we can run mahout from the shell.

If you can't execute mahout, give the script execute permission.
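In shell form, again as a sketch meant for ~/.bashrc. The chmod only runs when the launcher exists but is not yet executable, and exporting MAHOUT_HOME is just a convenience so later commands can reuse the path.

```shell
export MAHOUT_HOME=/usr/lib/mahout
export PATH="$MAHOUT_HOME/bin:$PATH"

# Give the launcher script execute permission if it is missing.
if [ -f "$MAHOUT_HOME/bin/mahout" ] && [ ! -x "$MAHOUT_HOME/bin/mahout" ]; then
  chmod +x "$MAHOUT_HOME/bin/mahout"
fi
```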

Running mahout with no arguments lists all the programs available for the different algorithms.

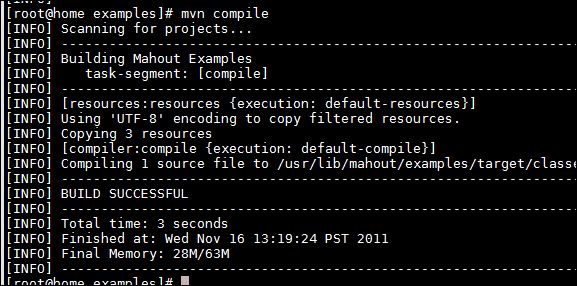

Then go to the examples folder and run `mvn compile`.
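A small sketch of this step, assuming the /usr/lib/mahout layout used above:

```shell
MAHOUT_HOME=${MAHOUT_HOME:-/usr/lib/mahout}

# Compile the examples module on its own; it depends on the Mahout jars
# that `mvn install` placed in the local ~/.m2 repository earlier.
if [ -f "$MAHOUT_HOME/examples/pom.xml" ] && command -v mvn >/dev/null 2>&1; then
  (cd "$MAHOUT_HOME/examples" && mvn compile)
fi
```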

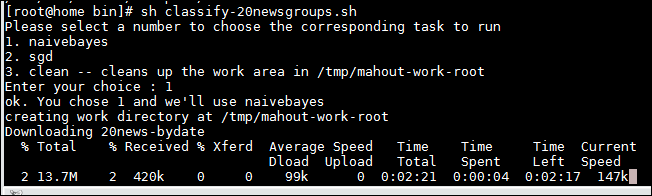

Now you can run an example, such as the one that classifies the 20 Newsgroups data set.

Here we didn't specify HADOOP_HOME, so it runs locally. The shell script downloads the data, prepares it, and then runs the classifier.
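A sketch of kicking off that example. The script name classify-20newsgroups.sh under examples/bin is an assumption based on Mahout checkouts of that era, so check your own tree, since names have changed between releases; depending on the version it may also ask you to pick a classifier interactively. Leaving HADOOP_HOME unset keeps everything local, and the bin/mahout launcher in those checkouts also honors MAHOUT_LOCAL for forcing local mode.

```shell
MAHOUT_HOME=${MAHOUT_HOME:-/usr/lib/mahout}

# With HADOOP_HOME unset, bin/mahout runs the jobs locally instead of on Hadoop.
unset HADOOP_HOME
# Any non-empty MAHOUT_LOCAL forces local mode (if your bin/mahout supports it).
export MAHOUT_LOCAL=true

SCRIPT="$MAHOUT_HOME/examples/bin/classify-20newsgroups.sh"   # assumed name
if [ -f "$SCRIPT" ]; then
  # Downloads the data, prepares it, runs the classifier, prints a confusion matrix.
  (cd "$MAHOUT_HOME/examples/bin" && bash ./classify-20newsgroups.sh)
fi
```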

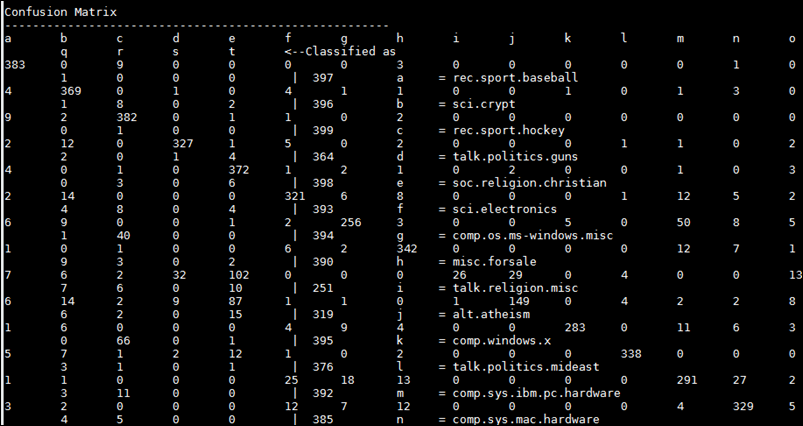

When it finishes, it shows the confusion matrix for the test data.
