Wednesday, June 27, 2012

Google I/O 2012, Android 4.1 new features

. Voice typing, offline support. (no data connection required.)

. Accessibility support

. Android Beam (NFC)

Send videos/photos between phones.

. Notifications.

Actionable notifications, e.g. a call button displayed for a missed call.

Gmail new-message notifications auto-expand; swipe to remove them.

Like button on the notification bar, without opening the app.

Two-finger gesture to expand a notification, e.g. the one from Pulse.

. Search

Google Now, new UI, Knowledge Graph.

Knowledge Graph, like Siri for Apple.

Google Now: traffic, public transit, places around you. It tells you the best dish at a given restaurant, flight information such as delays, and sports scores in real time.

PDK

Google Play.

Apps, Movies, Books, Music.

600K apps, 20 billion app installs.

Apk Expansion files,

improved analytics

ready to review

android training.

new features,

App encryption (encrypted with a device-specific key).

Helpful for paid applications: you can't just copy the APK off one device and use it on another.

Smart App update.

C2DM -> Google Cloud Messaging.

free for all developers, no quota limitations.

Magazine.

Nexus 7

7-inch tablet by Asus, loaded with 4.1.

View magazines, switch reading modes.

Watching TV.

New recommendation engine.

"What's the song playing" widget.

Chrome as the standard browser.

offline maps

Thursday, June 21, 2012

IE: Crystal Report can’t print, "Please wait while the Crystal Report print control is loaded"

Click the print button in the Crystal Report viewer: printing works well on Firefox or Chrome, but not on IE.

On IE you will see the loading screen, maybe forever.

Why? Basically, the Crystal Report viewer has a PrintMode property, and one of its modes is ActiveX.

- When the property is set to PrintMode.PDF, the report is exported as a PDF on the Web server, and then streamed to the browser. The user is given the option to print directly to the printer. This option is cross-platform compatible.

- When the property is set to PrintMode.ActiveX, an ActiveX printing control allows users to print the report directly to their local printer.

Only IE supports the ActiveX mode. The good news is that you can print the report directly to a printer; the bad news is that, for whatever reason, the user may not be able to install the ActiveX client.

How to verify you got the correct ActiveX client?

On IE9 or above, check Programs -> Manage add-ons. You should see the add-on published by SAP.

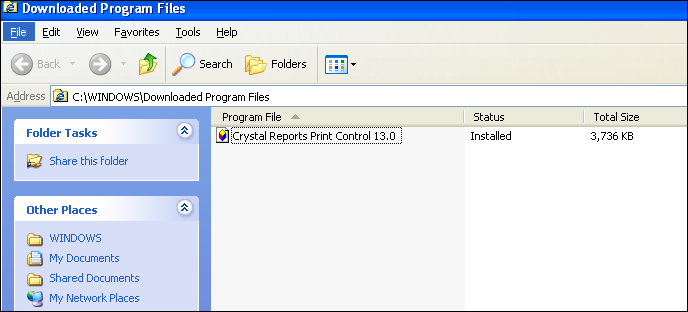

For IE8 and below, check c:\windows\downloaded program files; there should be an entry like this,

If you don’t see it, you will see the loading screen forever, which is stupid.

Wednesday, June 20, 2012

Java XPath examples for C# developers

In C#, you can use XLinq or XPath to query a document quickly. For XPath, just load the document, then pass the XPath expression to the query method, and you get the results.

Here is one basic document,

| <?xml version="1.0" encoding="UTF-8"?> |

If we need the sum of all item amounts, we can use the XPath expression sum(//item/amount).

In C#, here is the code; let’s assume the document is located in order.xml.

Using XLinq,

or using XPath,

Both output 310 here.

In Java, the code is more complicated. Here is one sample using DOM and XPath in Java,

| DocumentBuilderFactory factory=DocumentBuilderFactory.newInstance(); |

That’s Java: more code to do the same thing compared with C#.
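Since the listings in this post are cut short, here is a minimal, self-contained sketch of the DOM plus XPath approach. It is a sketch under assumptions, not the original code: the inline order document (three items with amounts 100, 200 and 10) and the class name XPathSum are made up for illustration, chosen only so that sum(//item/amount) comes out as 310.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;

class XPathSum {
    // Hypothetical sample document; only the item/amount structure is taken
    // from the XPath expression used in the post.
    static final String ORDER_XML =
        "<?xml version=\"1.0\" encoding=\"UTF-8\"?>"
        + "<order>"
        + "<item><name>a</name><amount>100</amount></item>"
        + "<item><name>b</name><amount>200</amount></item>"
        + "<item><name>c</name><amount>10</amount></item>"
        + "</order>";

    // Parse the XML into a DOM tree, then evaluate sum(//item/amount) on it.
    static double sumAmounts(String xml) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        DocumentBuilder builder = factory.newDocumentBuilder();
        Document doc = builder.parse(
            new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));

        XPath xpath = XPathFactory.newInstance().newXPath();
        // XPathConstants.NUMBER makes evaluate() return a java.lang.Double.
        return (Double) xpath.evaluate("sum(//item/amount)", doc, XPathConstants.NUMBER);
    }

    public static void main(String[] args) throws Exception {
        System.out.println(sumAmounts(ORDER_XML)); // prints 310.0
    }
}
```

To read from a file such as order.xml instead, builder.parse(new java.io.File("order.xml")) can replace the in-memory stream.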

Tuesday, June 12, 2012

Collect varnish log using syslog-ng

Start the varnishd daemon,

then run varnishlog to see the heartbeat checks.

Run varnishncsa to get an Apache-like request log when you send some requests.

If you use ab to send a lot of requests, you will see more log entries, as expected.

Add a program source to the syslog-ng source list,

then you will see the request log in the destination file.

How-to: Configure and install syslog-ng on CentOS 5 tutorial

Download one version, which comes as two tar files: one is a library called eventlog, the other is syslog-ng.

Compile and install the eventlog library, which is required by syslog-ng.

Once done, you can find its pkg-config file under /usr/local/lib/pkgconfig.

Now add that path to the PKG_CONFIG_PATH variable before configuring syslog-ng,

export PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/usr/local/lib/pkgconfig

otherwise you get an error when you configure syslog-ng.

Then run ./configure && make && make install

You can run /usr/local/sbin/syslog-ng -V to show the version information.

By default, syslog-ng.conf is located under /usr/local/etc

Then change the conf file as follows. We will read from two sources: one is a file, the other is a syslog client. We will use nc as the test client,

| @version: 3.3 destination(f_local); |

Touch the input file and put some words in it; you can see the message dumped to the destination.

Test the network source using nc,
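If nc is not at hand, a few lines of Java can play the same role as a throwaway syslog client. This is only a sketch under assumptions: the configuration above is cut short, so the host, the port 514 and the use of UDP are placeholders to adapt to whatever network source the conf file declares; the class and method names are made up as well. The one fixed rule it relies on is the syslog priority value, which is facility * 8 + severity.

```java
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.charset.StandardCharsets;

class SyslogTestClient {
    // Build a minimal BSD-style syslog line: <PRI>tag: text
    // PRI = facility * 8 + severity, e.g. user (1) + notice (5) = 13.
    static String format(int facility, int severity, String tag, String text) {
        int priority = facility * 8 + severity;
        return "<" + priority + ">" + tag + ": " + text;
    }

    // Send one message as a single UDP datagram (assumes a UDP source).
    static void sendUdp(String host, int port, String message) throws Exception {
        byte[] payload = message.getBytes(StandardCharsets.UTF_8);
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(
                payload, payload.length, InetAddress.getByName(host), port));
        }
    }

    public static void main(String[] args) throws Exception {
        String message = format(1, 5, "javatest", "hello from java");
        System.out.println(message);
        // Hypothetical target; change to match the source in syslog-ng.conf.
        sendUdp("127.0.0.1", 514, message);
    }
}
```

If the source in the conf file is TCP rather than UDP, a java.net.Socket writing the same line followed by a newline would be the equivalent.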

Monday, June 11, 2012

How-to: using talend open studio for big data tutorial-3, write data to hbase

Once we get data back from a Hive query, the result is usually relatively small, so let’s dump it out to HBase. Drag in the component called hbaseoutput and configure the ZooKeeper info. That’s all you need to know about the HBase cluster (the client will figure out which HMaster is active and which region server serves the storage).

Here the table is mapped to the keyspace, and we create one random key; both columns are mapped to the “profile” column family.

Click the advanced settings tab to add this column family to the metadata.

7 rows are inserted into HBase, and the table was also created by Talend. The data is there!

So we did a simple big data ETL job: load data from a DB to HDFS, write a Hive query to do the analysis, and push the result to HBase for application queries.

hope this helps.

How-to: using talend open studio for big data tutorial-2, read Hive data

Once the data is loaded to HDFS, let’s create a Hive external table using the hive shell.

First make sure the data is there,

then use the hive shell to create one external table named customers.

| CREATE EXTERNAL TABLE customers(id INT, name STRING, |

For Talend to connect to Hive, we need to start a Hive Thrift server.

Now let’s create a flow to read the data in Hive. Pick the version and the Thrift server IP/port, then enter a Hive query.

One important step: click edit schema and add just one column of type Object. We will then parse the result and map it to our schema.

Click the advanced tab to enable parsing of the query results, using the Object-type column we just created.

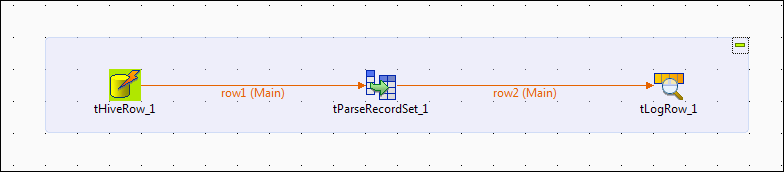

Drag the parserecordset component to the surface and connect the main output of hiverow to it, then click edit schema to do the mapping.

Then match the values,

the job looks like the following,

If we need to output the rows, just add one logrow and connect it. The final layout,

Now click to run this job. The console tells you whether it connected to the Hive server successfully and, if it failed, why.

The Hive server will show that it received one query and translated it to MapReduce on the fly,

once done, you can see the results in the run console.

We can then export the result to HBase to enable real-time application queries. Check it here,

How-to: using talend open studio for big data tutorial-1

Big data is a hot topic now, and Talend is very popular in the open source ETL community. However, it did not have enough support for big data. Recently, Talend released a new open source product called Talend Open Studio for Big Data. You can download it and play with it in your environment; it has built-in support for big data. I will put several test cases here so you can get an impression of what the product offers.

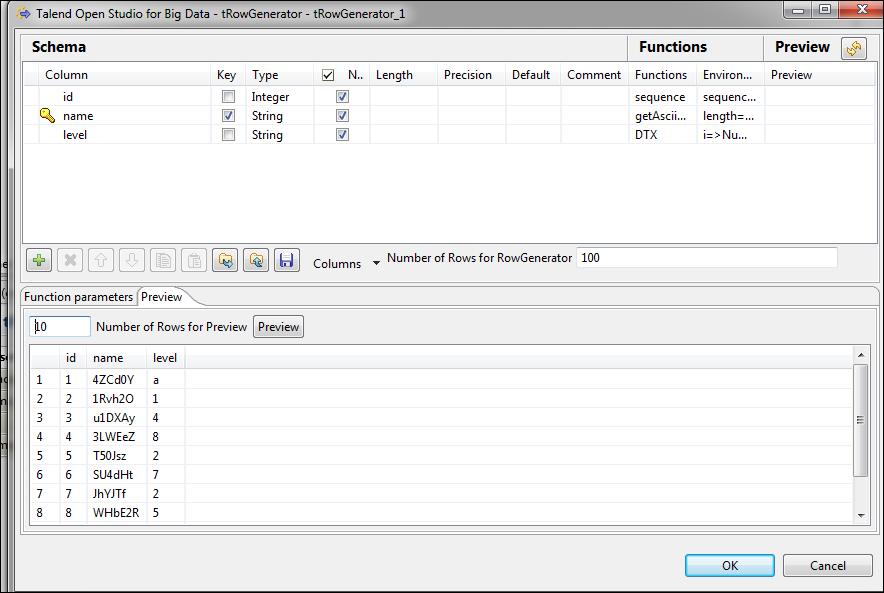

1. From DB to HDFS

For the DB side, I just use the row generator to simulate the rows in a DB, with some simple logic to create a series of rows with 3 columns: ID, name and level.

Then drag and drop the hdfsoutput component to the surface and connect the main output of the row generator to it. For the hdfsoutput, just specify the name node address and the folder to hold the file.

Then click to run the job; it will create a file containing all the rows we generated, in CSV format.
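To get a feel for what ends up in that file, the row generator step can be approximated in plain Java. This is a rough sketch, not what Talend generates: the class name, the "customer" name pattern, the 1 to 5 range for level and the comma separator are all assumptions; only the three columns (ID, name, level) come from the job described above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

class RowGeneratorSketch {
    // Produce `count` CSV lines of the form id,name,level.
    // The seed keeps the random level values reproducible between runs.
    static List<String> generateRows(int count, long seed) {
        Random random = new Random(seed);
        List<String> rows = new ArrayList<>();
        for (int id = 1; id <= count; id++) {
            String name = "customer" + id;     // hypothetical naming pattern
            int level = 1 + random.nextInt(5); // assumed range: 1 to 5
            rows.add(id + "," + name + "," + level);
        }
        return rows;
    }

    public static void main(String[] args) {
        // Print the rows; in the Talend job they are written to HDFS instead.
        for (String row : generateRows(7, 42L)) {
            System.out.println(row);
        }
    }
}
```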

Remember to pick the right version for your Hadoop environment. When the job is done, you can see the time taken to transfer the rows between the two systems.

Once we have exported the data from a traditional DB to HDFS, we can run a Hive query to get the results; that’s the next case to test.