Once the data has been loaded into HDFS, let’s create a Hive external table using the Hive shell.

First, make sure the data is actually in HDFS.
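If you prefer to script this check, here is a minimal Python sketch. It assumes the `hdfs` command-line client is on the PATH and relies on `hdfs dfs -test -e <path>`, which exits with status 0 when the path exists. The `runner` parameter is a hypothetical hook added only so the check can be exercised without a cluster.

```python
import subprocess
from typing import Callable, Sequence


def hdfs_path_exists(
    path: str,
    runner: Callable[[Sequence[str]], subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Return True if `hdfs dfs -test -e path` exits with status 0."""
    cmd = ["hdfs", "dfs", "-test", "-e", path]
    result = runner(cmd)
    # -test -e returns 0 when the file or directory exists, non-zero otherwise.
    return result.returncode == 0
```

For example, `hdfs_path_exists("/user/hive/customers")` (a hypothetical directory; use wherever you loaded the data), followed by `hdfs dfs -ls` on the same path if you want to eyeball the files.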

Then use the Hive shell to create an external table named customers:

`CREATE EXTERNAL TABLE customers(id INT, name STRING,`
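Because the statement above is cut off, here is a hedged Python sketch that assembles a complete `CREATE EXTERNAL TABLE` statement you could paste into the Hive shell. Only `id INT` and `name STRING` come from the post; the extra `city` column, the comma delimiter, and the HDFS location are hypothetical placeholders. The two parts that matter are `EXTERNAL`, which means dropping the table removes only the metadata and leaves the files in HDFS, and `LOCATION`, which points Hive at the directory that already holds the data.

```python
from typing import List, Tuple


def external_table_ddl(
    table: str,
    columns: List[Tuple[str, str]],
    location: str,
    delimiter: str = ",",
) -> str:
    """Build a HiveQL CREATE EXTERNAL TABLE statement over delimited text files."""
    cols = ", ".join(f"{name} {hive_type}" for name, hive_type in columns)
    return (
        f"CREATE EXTERNAL TABLE {table}({cols}) "
        f"ROW FORMAT DELIMITED FIELDS TERMINATED BY '{delimiter}' "
        f"STORED AS TEXTFILE "
        f"LOCATION '{location}'"
    )


# id and name are from the post; "city" and the location are hypothetical.
ddl = external_table_ddl(
    "customers",
    [("id", "INT"), ("name", "STRING"), ("city", "STRING")],
    "/user/hive/customers",
)
print(ddl)
```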

For Talend to connect to Hive, we need to start a Hive Thrift server.
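On older Hive releases the Thrift server (the original HiveServer) is typically started with `hive --service hiveserver` and listens on port 10000 by default; newer releases ship HiveServer2 (`hiveserver2`) instead. Before debugging the Talend job, it can help to confirm that the port is reachable at all. A minimal standard-library Python sketch; the host name in the usage comment is a hypothetical placeholder.

```python
import socket


def thrift_port_open(host: str, port: int = 10000, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    This only shows that something is listening on the port; it does not
    speak the Thrift protocol or run a query.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, unreachable host, or timeout.
        return False


# "hive-server.example.com" is hypothetical; use your Hive server's address.
# print(thrift_port_open("hive-server.example.com"))
```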

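Now let’s create a job to read the data from Hive. Drop a tHiveRow component onto the design surface and, in its Basic settings, pick the Hive version, enter the IP address and port of the Thrift server, then type in a Hive query.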
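The same three pieces of information (server version, Thrift host/port, and query) are what any Hive client needs. As a hedged illustration, here they are gathered into a small Python structure that also derives the matching JDBC URL: `jdbc:hive://` for the original HiveServer and `jdbc:hive2://` for HiveServer2. The class and field names are hypothetical and are not Talend's internal property names.

```python
from dataclasses import dataclass


@dataclass
class HiveConnection:
    host: str
    port: int = 10000          # default Thrift port for HiveServer and HiveServer2
    database: str = "default"
    server2: bool = False      # False = original HiveServer, True = HiveServer2

    def jdbc_url(self) -> str:
        """Return the JDBC URL a Hive client would use for these settings."""
        scheme = "hive2" if self.server2 else "hive"
        return f"jdbc:{scheme}://{self.host}:{self.port}/{self.database}"


# Hypothetical values; use the IP/port of the Thrift server you started.
conn = HiveConnection(host="192.168.1.10")
query = "SELECT id, name FROM customers"
print(conn.jdbc_url())  # jdbc:hive://192.168.1.10:10000/default
```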

One important step: click Edit schema and add a single column of type Object. We will later parse the result held in this column and map it to our own schema.

Then open the Advanced settings tab and enable the option that propagates the query's record set, choosing the Object column we just created to hold it.

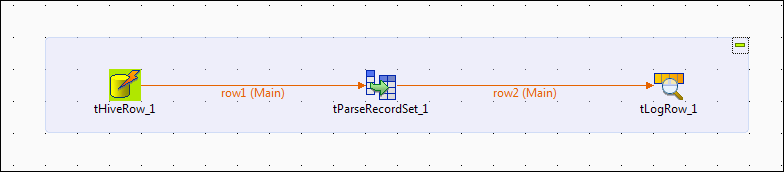

Drag a tParseRecordSet component onto the design surface and connect the Main output of tHiveRow to it. Then click Edit schema to define the output columns for the mapping.

Then match each output column to the corresponding value in the record set.
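What tHiveRow and tParseRecordSet do together can be sketched in plain Python: the query produces a single row whose only field is the whole record set (the Object column), and the parser then walks that record set and copies each named value into the output schema. This sketch uses an in-memory SQLite database as a stand-in for Hive, so the sample rows and the `record_set` field name are hypothetical; it illustrates the mapping idea, not Talend's generated code.

```python
import sqlite3
from typing import Dict, Iterator, List


def run_query(conn: sqlite3.Connection, sql: str) -> Dict[str, object]:
    """Like tHiveRow with the record set propagated: one row, one Object column."""
    return {"record_set": conn.execute(sql)}


def parse_record_set(
    row: Dict[str, object], mapping: Dict[str, str]
) -> Iterator[Dict[str, object]]:
    """Like tParseRecordSet: map each output column to a record-set column by name."""
    cursor = row["record_set"]
    names: List[str] = [d[0] for d in cursor.description]
    for values in cursor:
        record = dict(zip(names, values))
        yield {out_col: record[src_col] for out_col, src_col in mapping.items()}


# Stand-in data; in the tutorial this lives in the Hive table `customers`.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])

row = run_query(conn, "SELECT id, name FROM customers ORDER BY id")
rows = list(parse_record_set(row, {"id": "id", "name": "name"}))
print(rows)  # [{'id': 1, 'name': 'Alice'}, {'id': 2, 'name': 'Bob'}]
```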

The job then looks like the following.

If we want to output the rows, just add a tLogRow component and connect tParseRecordSet to it. That gives the final layout.
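tLogRow simply prints each incoming row to the Run console; in its basic mode the fields of a row are joined with a separator, `|` by default. A minimal Python sketch of that behaviour (not Talend's code), using rows shaped like the `id`/`name` schema; the sample values are hypothetical.

```python
from typing import Dict, Iterable, List


def log_rows(
    rows: Iterable[Dict[str, object]], columns: List[str], sep: str = "|"
) -> List[str]:
    """Print each row as separator-joined values, in schema column order."""
    lines = []
    for row in rows:
        line = sep.join(str(row[col]) for col in columns)
        print(line)
        lines.append(line)
    return lines


# Hypothetical rows shaped like the output schema (id, name).
lines = log_rows([{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}], ["id", "name"])
```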

Now run the job. The console tells you whether it connected to the Hive server successfully and, if it failed, why.

On the Hive server side, you can see that it received the query and compiled it into MapReduce jobs on the fly.

Once the job finishes, you can see the results in the Run console.

Finally, we can export the results to HBase so that applications can query them in real time. Check it here.

21 comments:

good tutorial!!!

I want to ask about "pick up the version, and the thrift server ip/port": where can I do it? I'm a Hadoop newbie. Thank you.

